2025-05-14 ODSC East

What are we talking about?

- What is LlamaIndex

- What is an Agent?

- Agent Patterns in LlamaIndex

- Chaining

- Routing

- Parallelization

- Orchestrator-Workers

- Evaluator-Optimizer

- Giving Agents access to data

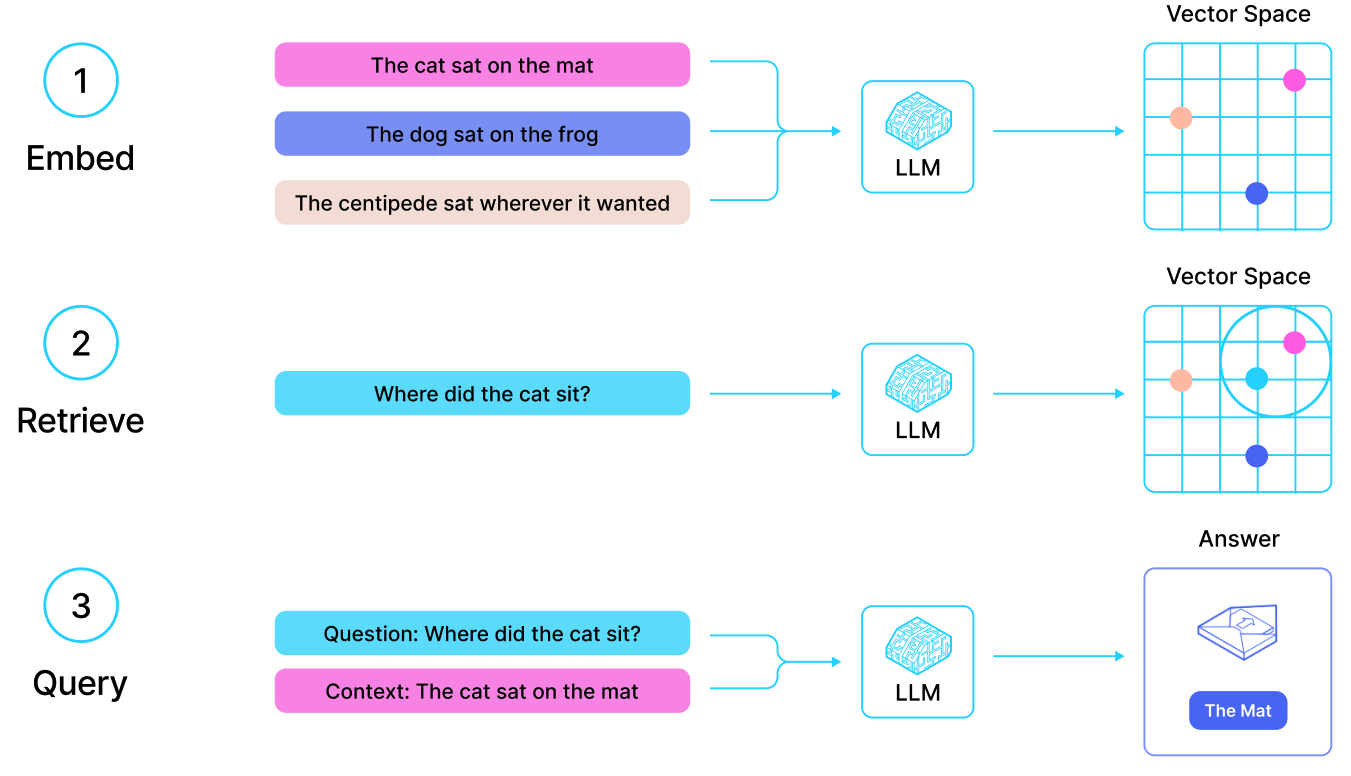

- How RAG works

What is LlamaIndex?

Python: docs.llamaindex.ai

TypeScript: ts.llamaindex.ai

LlamaParse

World's best parser of complex documents

Free for 1000 pages/day!

cloud.llamaindex.ai

LlamaCloud

Turn-key RAG API for Enterprises

Available as SaaS or private cloud deployment

Sign up at cloud.llamaindex.ai

LlamaHub

Why LlamaIndex?

- Build faster

- Skip the boilerplate

- Avoid early pitfalls

- Get best practices for free

- Go from prototype to production

What can you build in LlamaIndex?

- Lots of stuff! Especially...
  - AI agents
  - RAG

What is an agent?

Semi-autonomous software that uses tools to accomplish a goal

Agentic programming is a new paradigm

When does an agent make sense?

When your data is messy, which is most of the time

LLMs are good at turning lots of text into less text

LLMs work well under the hood

You don't have to build a chatbot

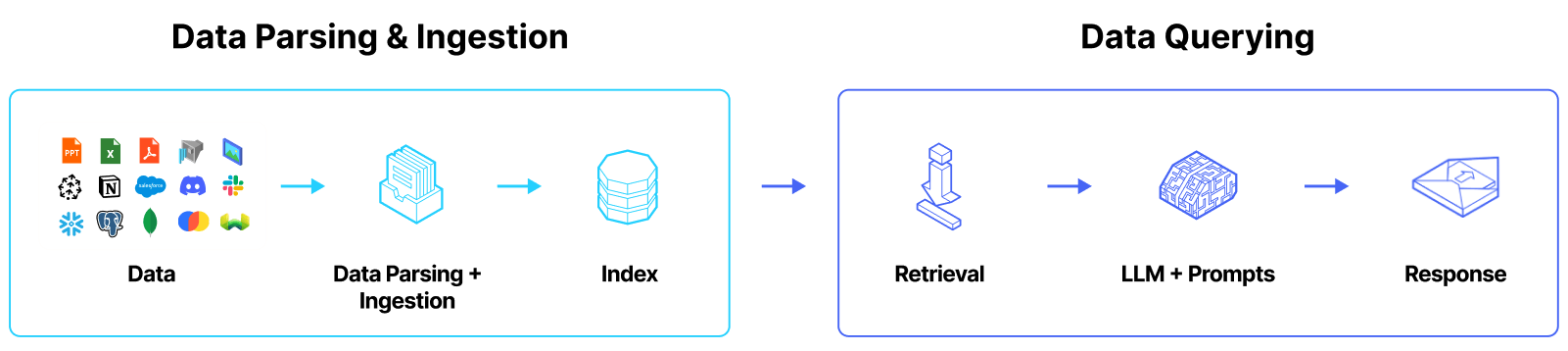

LLMs need data

The solution is RAG

RAG = infinite context

Agents need RAG, and RAG needs agents

Building Effective Agents

- Chaining

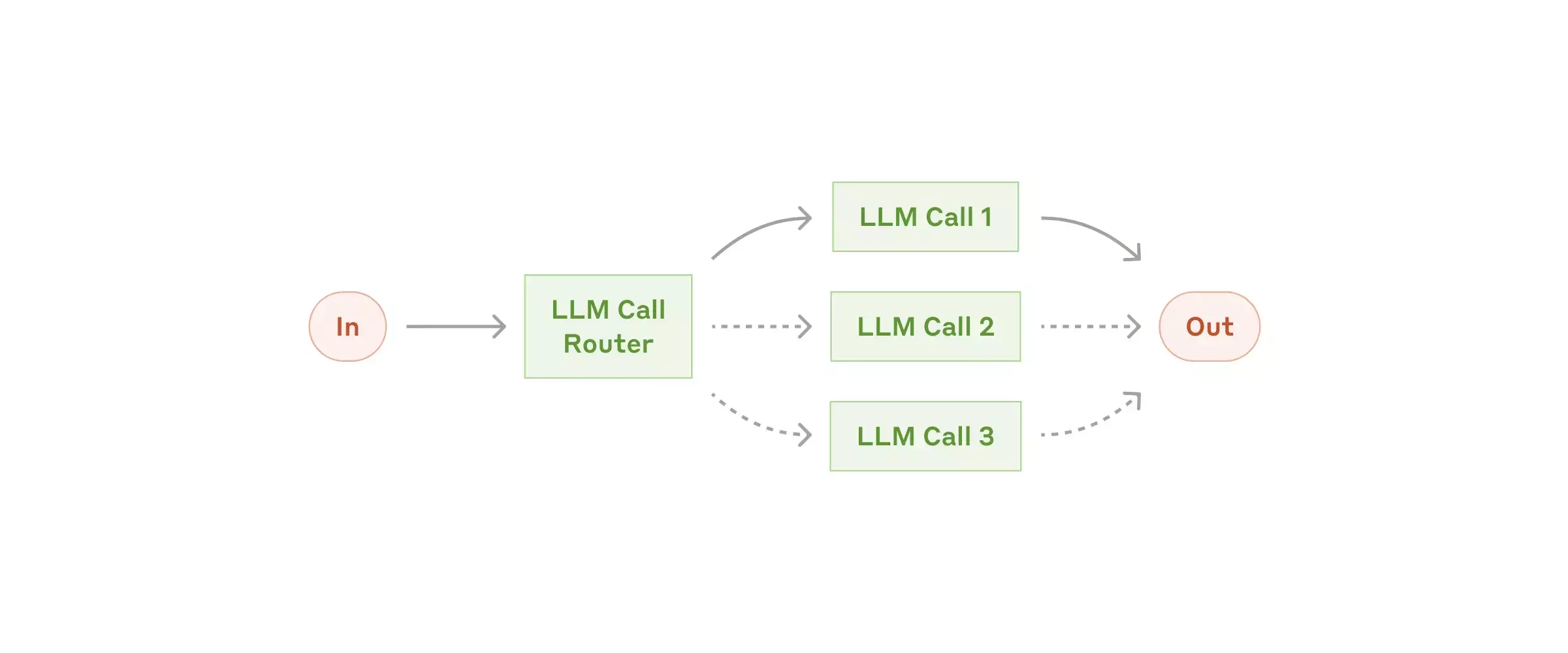

- Routing

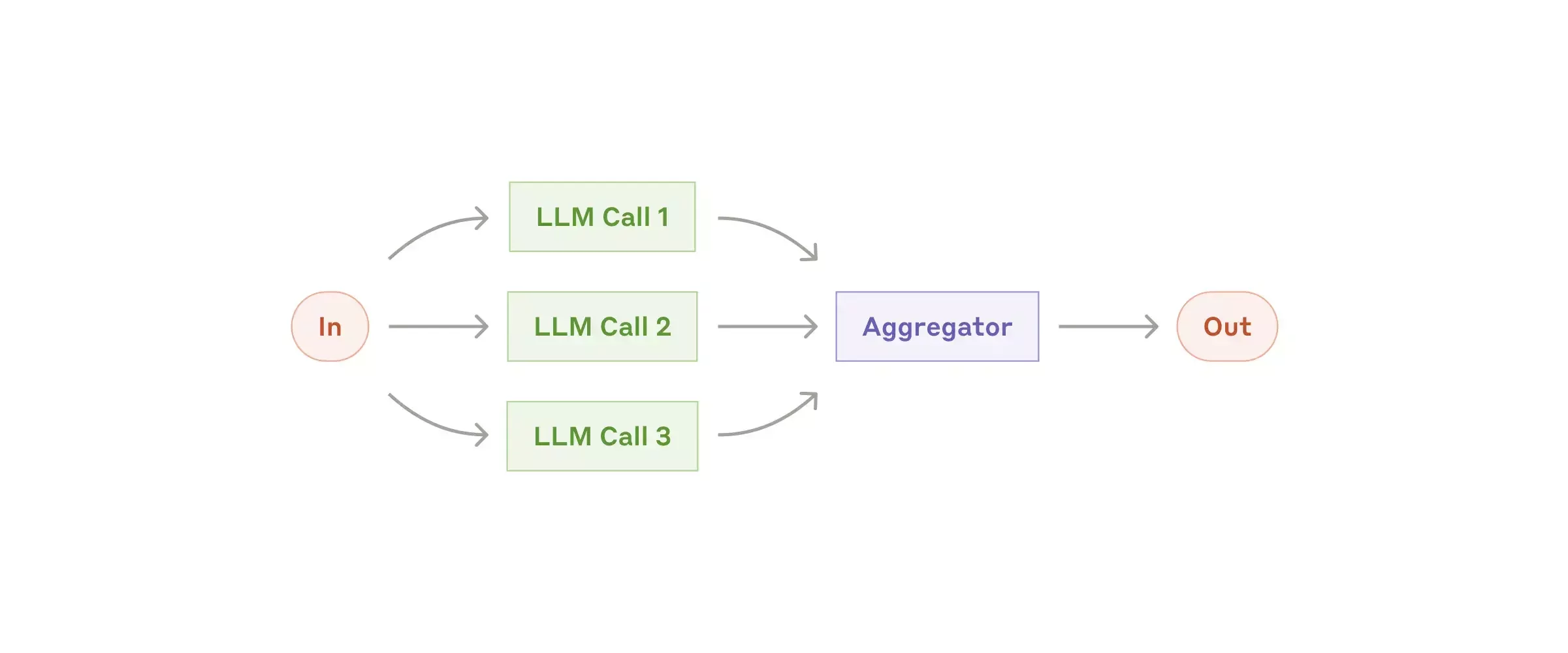

- Parallelization

- Orchestrator-Workers

- Evaluator-Optimizer

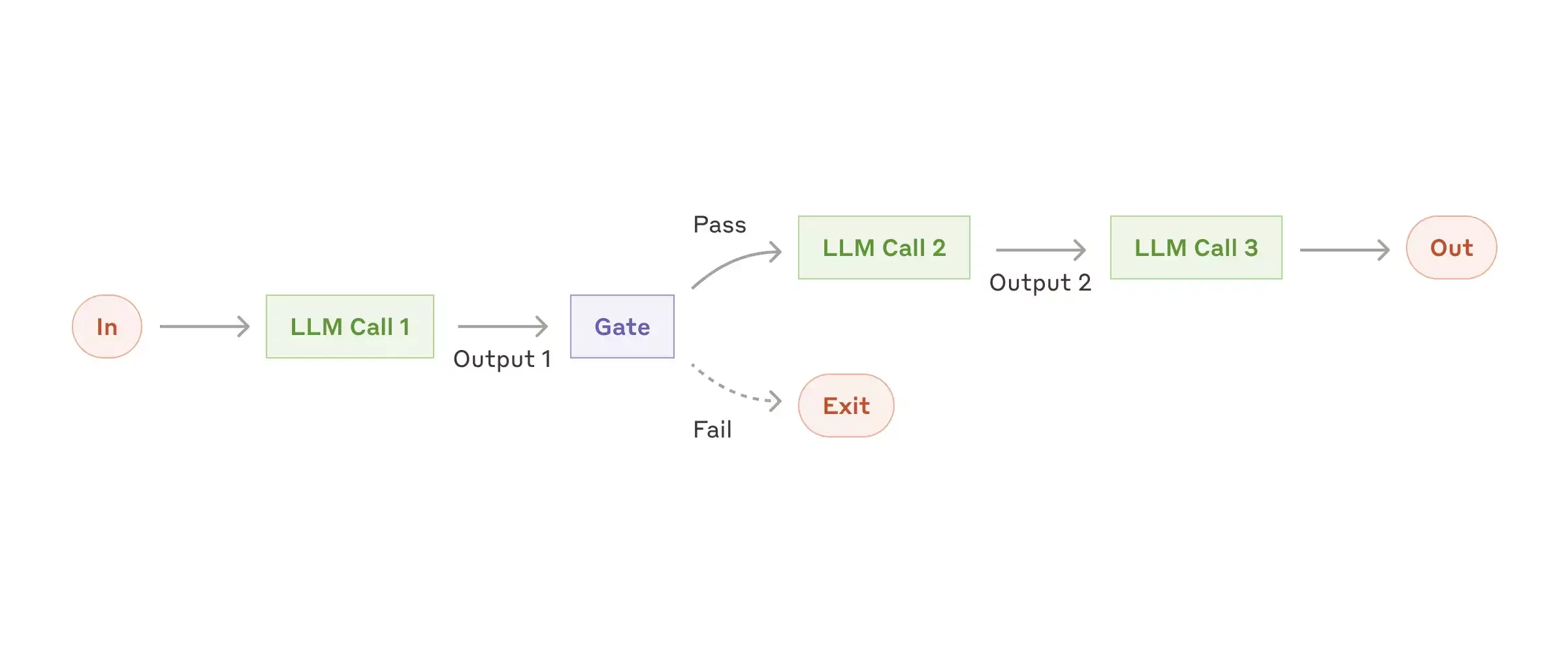

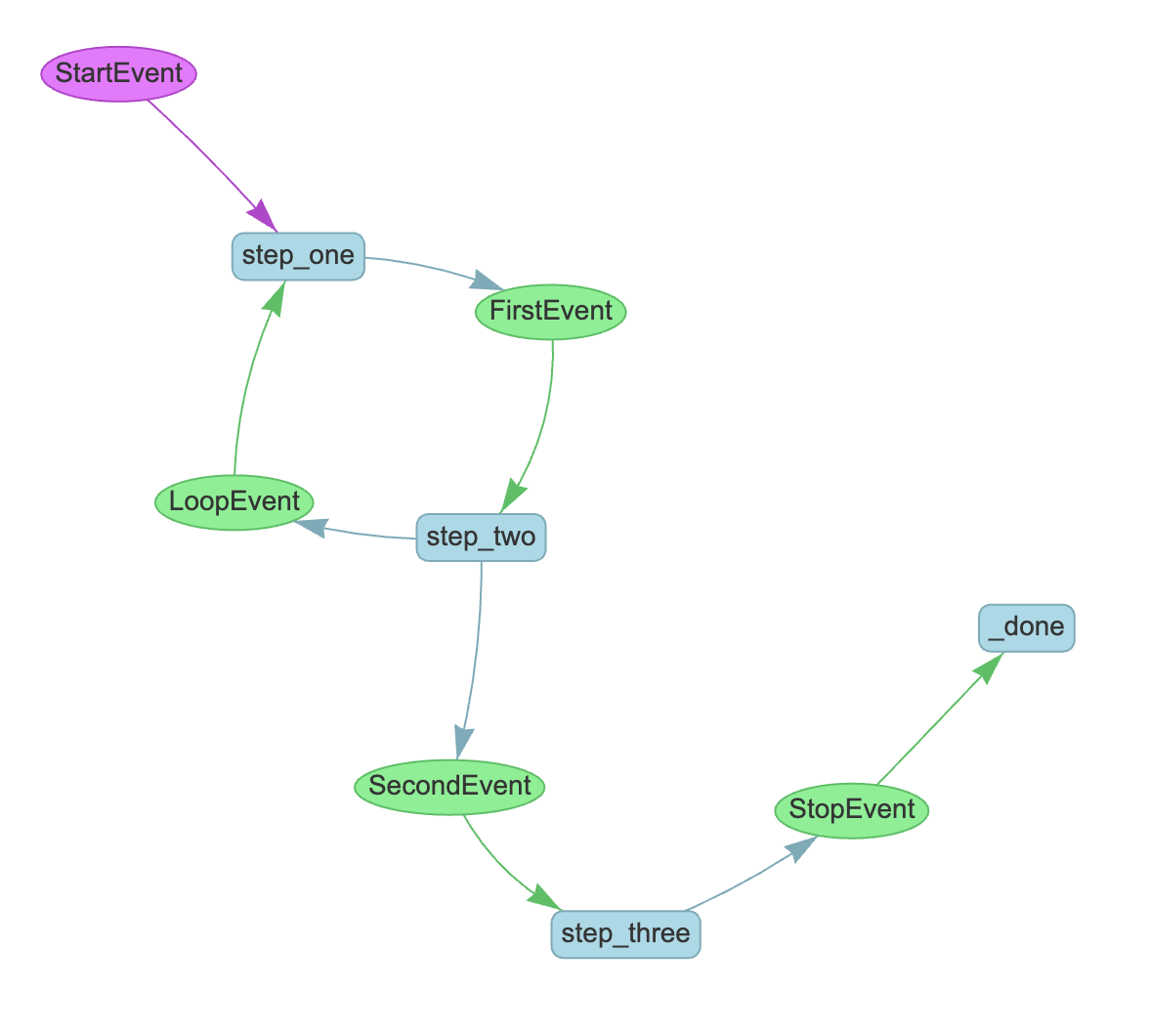

Chaining

Workflows

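```python
import asyncio

from llama_index.core.workflow import Event, StartEvent, StopEvent, Workflow, step

# Custom events carry data from one step to the next.
class FirstEvent(Event):
    first_output: str

class SecondEvent(Event):
    second_output: str

class MyWorkflow(Workflow):
    @step
    async def step_one(self, ev: StartEvent) -> FirstEvent:
        # Keyword arguments passed to run() are available on the StartEvent.
        print(ev.first_input)
        return FirstEvent(first_output="First step complete.")

    @step
    async def step_two(self, ev: FirstEvent) -> SecondEvent:
        print(ev.first_output)
        return SecondEvent(second_output="Second step complete.")

    @step
    async def step_three(self, ev: SecondEvent) -> StopEvent:
        print(ev.second_output)
        # Returning a StopEvent ends the workflow; its result is what run() returns.
        return StopEvent(result="Workflow complete.")

async def main():
    w = MyWorkflow(timeout=10, verbose=False)
    result = await w.run(first_input="Start the workflow.")
    print(result)

asyncio.run(main())
```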

Workflow visualization

Routing

Branching

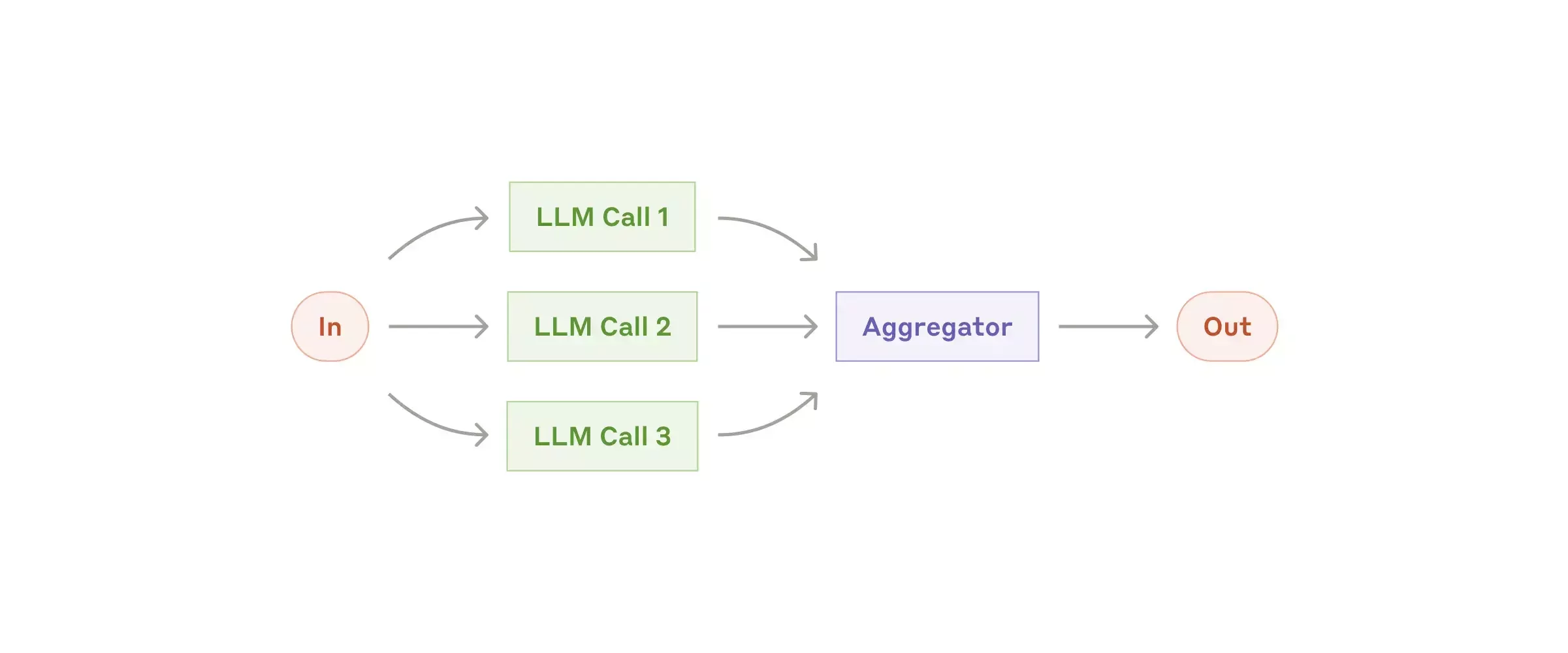

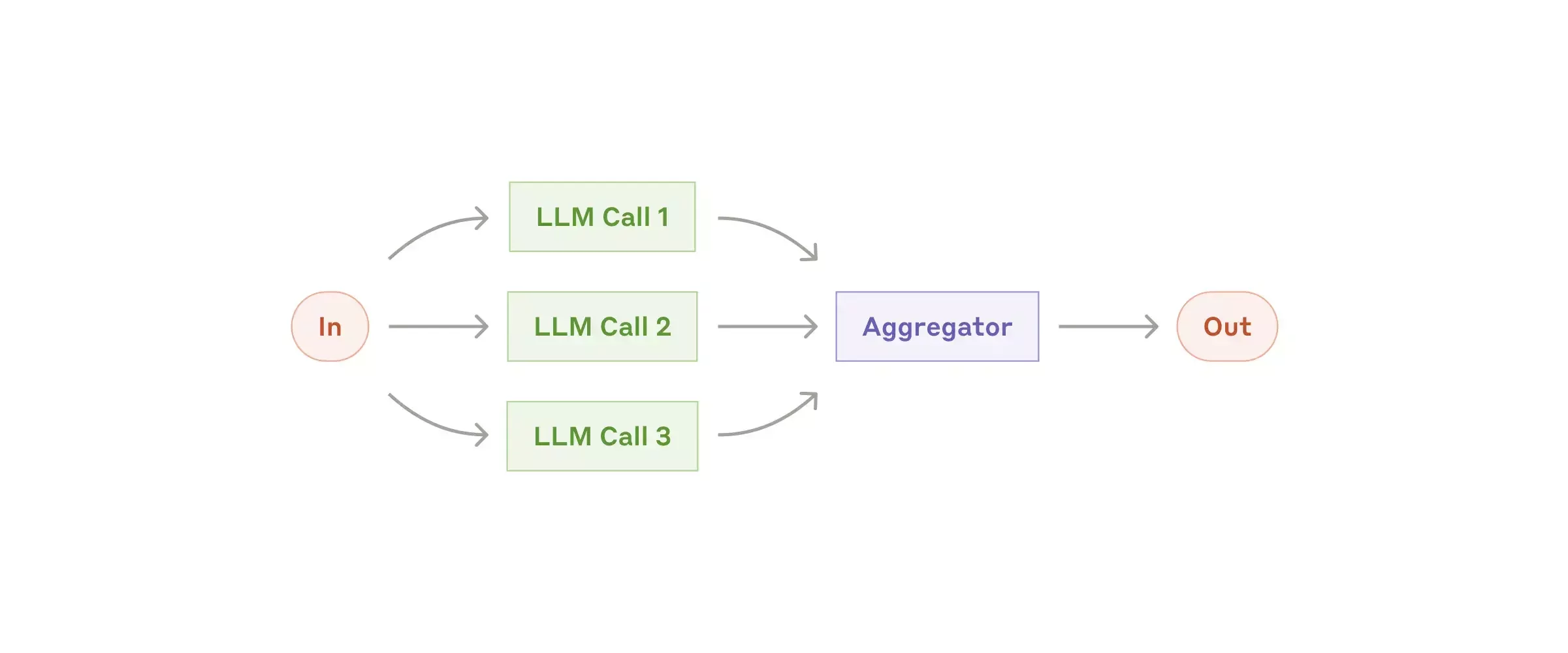
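Routing classifies an input and sends it down exactly one branch. A minimal stdlib-only sketch, assuming a hypothetical keyword-based `classify` in place of an LLM classifier and hypothetical handlers standing in for specialized prompts or sub-workflows (in LlamaIndex Workflows the same idea is a step whose return annotation lists more than one event type):

```python
import asyncio

# Hypothetical classifier: a real system would ask an LLM to pick the route.
def classify(query: str) -> str:
    q = query.lower()
    if any(word in q for word in ("refund", "invoice", "charge")):
        return "billing"
    if any(word in q for word in ("error", "crash", "bug")):
        return "technical"
    return "general"

# Hypothetical handlers: each stands in for a specialized prompt, model, or sub-workflow.
async def handle_billing(query: str) -> str:
    return f"[billing] {query}"

async def handle_technical(query: str) -> str:
    return f"[technical] {query}"

async def handle_general(query: str) -> str:
    return f"[general] {query}"

ROUTES = {
    "billing": handle_billing,
    "technical": handle_technical,
    "general": handle_general,
}

async def route(query: str) -> str:
    # Exactly one branch runs for each input.
    handler = ROUTES[classify(query)]
    return await handler(query)

if __name__ == "__main__":
    print(asyncio.run(route("The app shows an error on login")))
```

Keeping the routes in a dictionary makes adding a branch a one-line change; the classifier is the only part that needs an LLM.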

Parallelization

Sectioning

Parallelization: flavor 1
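Sectioning splits one task into independent pieces that run at the same time, then combines the results. A minimal sketch using `asyncio.gather`, where the hypothetical `summarize_section` stands in for an LLM call (here it just keeps the first sentence):

```python
import asyncio

# Hypothetical stand-in for an LLM call that summarizes one section.
async def summarize_section(title: str, text: str) -> str:
    await asyncio.sleep(0.01)  # simulate network latency
    first_sentence = text.split(".")[0].strip()
    return f"{title}: {first_sentence}."

async def summarize_document(sections: dict[str, str]) -> str:
    # Fan out: every section is processed concurrently.
    summaries = await asyncio.gather(
        *(summarize_section(title, text) for title, text in sections.items())
    )
    # Fan in: gather preserves input order, so the combined output is stable.
    return "\n".join(summaries)

if __name__ == "__main__":
    doc = {
        "Intro": "Agents use tools. They also plan.",
        "Methods": "We used workflows. Steps emit events.",
    }
    print(asyncio.run(summarize_document(doc)))
```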

Voting

Parallelization: flavor 2
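Voting runs the same task several times and aggregates the answers, for example by majority. A minimal sketch, assuming a hypothetical `judge` coroutine that stands in for a sampled LLM judgment; disagreement between runs is simulated with a seeded random generator and an `error_rate` parameter:

```python
import asyncio
import random
from collections import Counter

# Hypothetical stand-in for a sampled LLM judgment; repeated runs can disagree.
async def judge(text: str, rng: random.Random, error_rate: float) -> str:
    await asyncio.sleep(0)  # yield control, as a real network call would
    looks_unsafe = "password" in text.lower()
    if rng.random() < error_rate:  # simulate an occasional wrong answer
        looks_unsafe = not looks_unsafe
    return "unsafe" if looks_unsafe else "safe"

async def vote(text: str, n: int = 5, error_rate: float = 0.2, seed: int = 0) -> tuple[str, Counter]:
    rng = random.Random(seed)
    # Run n independent judgments concurrently.
    votes = await asyncio.gather(*(judge(text, rng, error_rate) for _ in range(n)))
    tally = Counter(votes)
    # Majority wins; with two labels and an odd n there can be no tie.
    winner = tally.most_common(1)[0][0]
    return winner, tally

if __name__ == "__main__":
    print(asyncio.run(vote("Here is my password: hunter2")))
```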

Concurrency

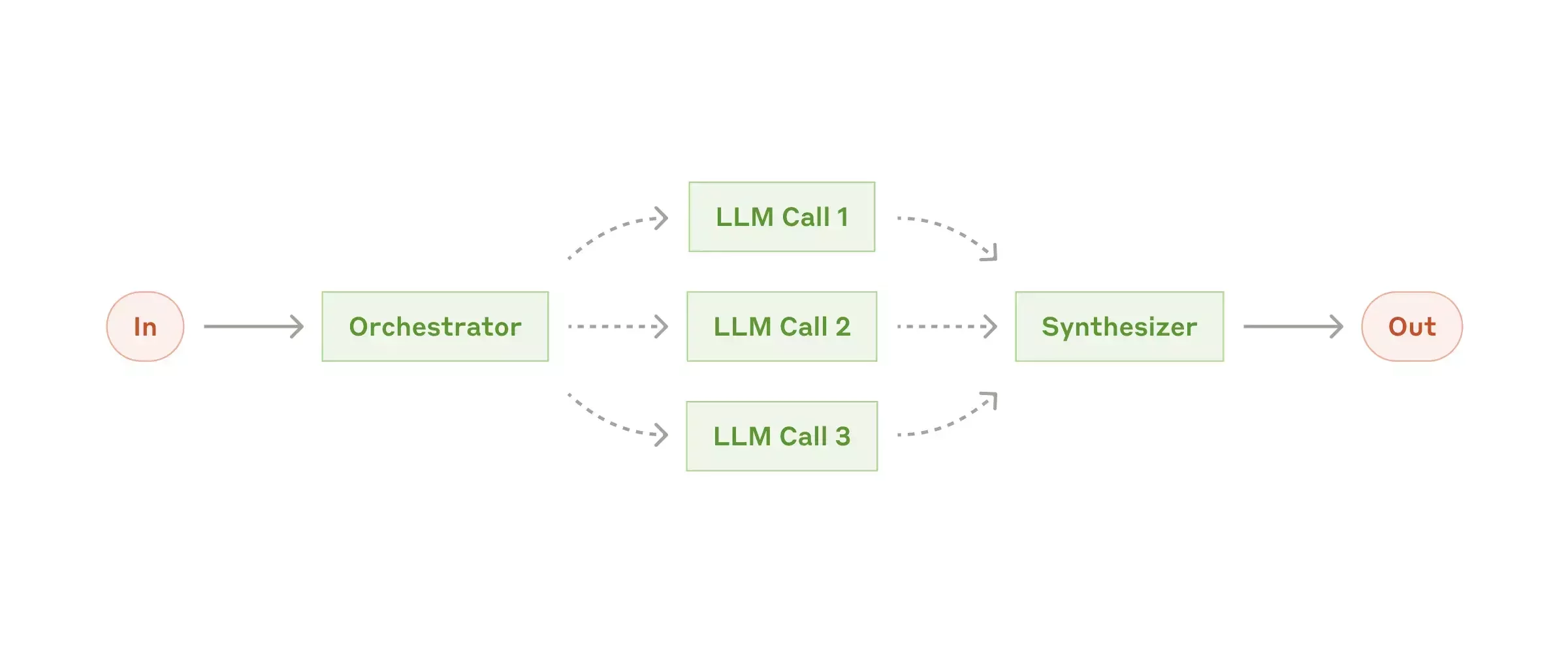

Orchestrator-Workers

Concurrency (again)

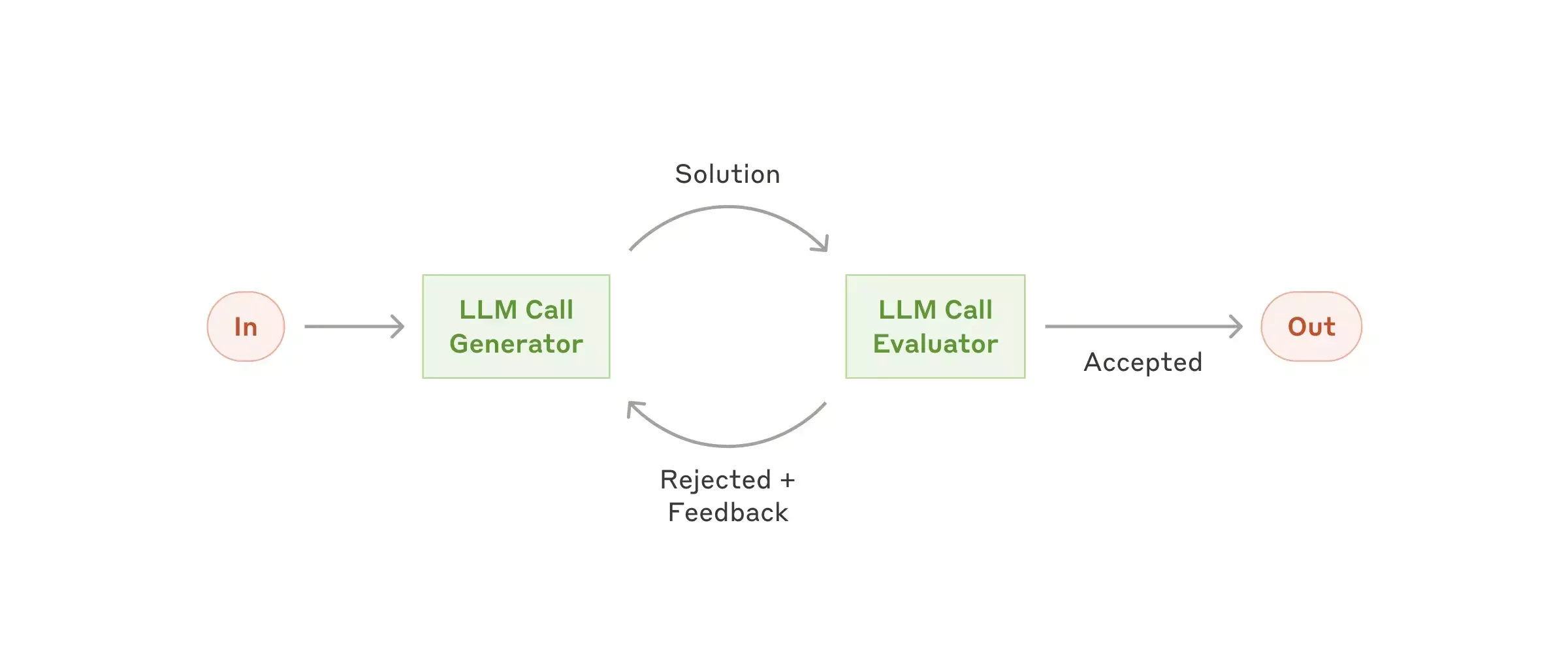

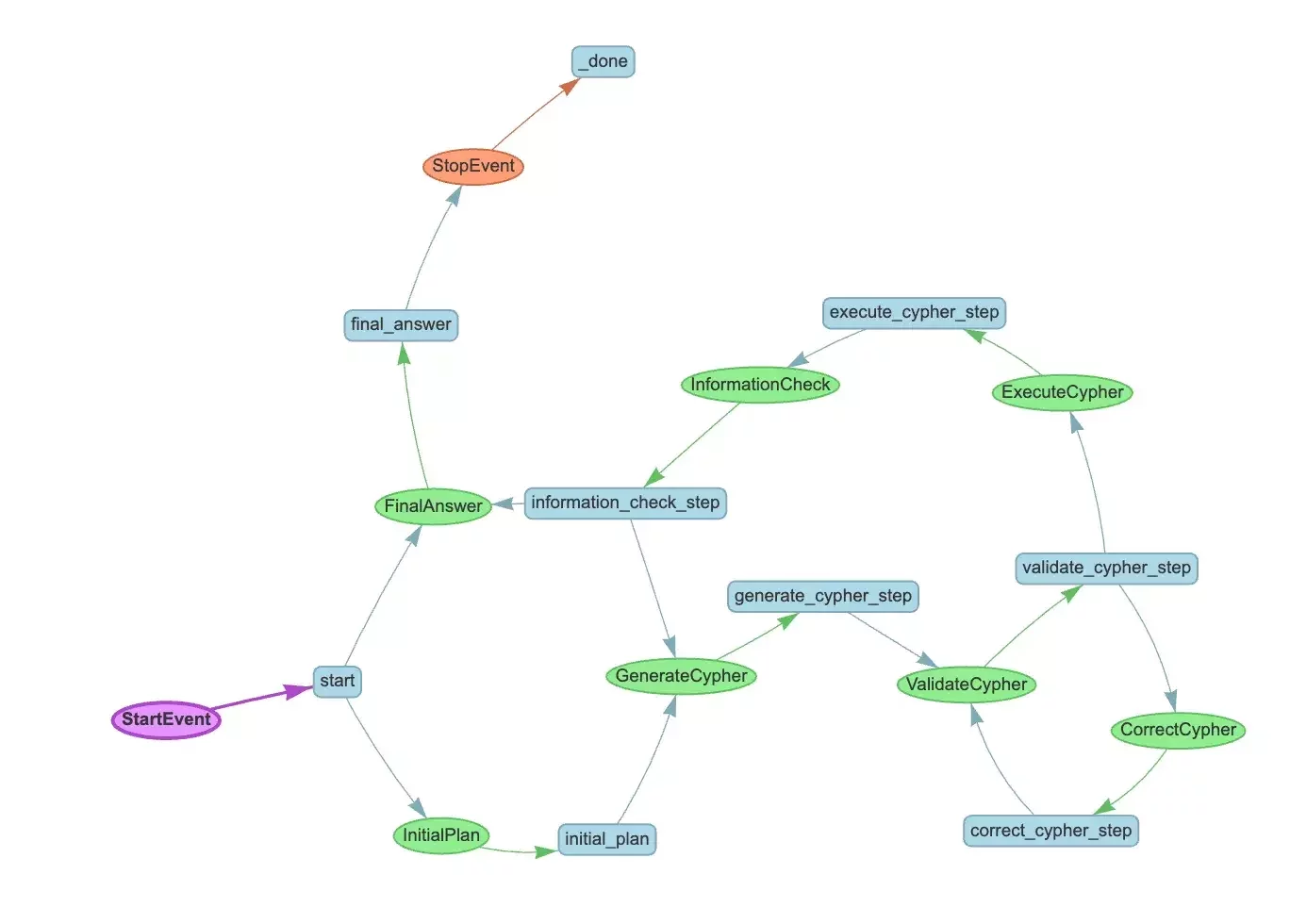
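Unlike sectioning, an orchestrator decides the subtasks at run time, hands them to workers, and synthesizes the results. A minimal sketch, assuming a hypothetical `plan` function in place of the orchestrating LLM, a hypothetical `do_subtask` in place of each worker's LLM call, and a fixed pool of workers pulling from an `asyncio.Queue` (loosely analogous to giving a LlamaIndex workflow step `num_workers`):

```python
import asyncio

# Hypothetical orchestrator: a real one would ask an LLM to break the task down.
def plan(task: str) -> list[str]:
    topics = [t.strip() for t in task.split(" and ")]
    return [f"research {topic}" for topic in topics]

# Hypothetical worker call: stands in for an agent or LLM handling one subtask.
async def do_subtask(subtask: str) -> str:
    await asyncio.sleep(0.01)
    return subtask.replace("research", "notes on")

async def worker(queue: asyncio.Queue, results: dict[int, str]) -> None:
    while True:
        index, subtask = await queue.get()
        try:
            results[index] = await do_subtask(subtask)
        finally:
            queue.task_done()

async def orchestrate(task: str, num_workers: int = 2) -> str:
    subtasks = plan(task)
    queue: asyncio.Queue = asyncio.Queue()
    for item in enumerate(subtasks):
        queue.put_nowait(item)  # each item is (index, subtask)
    results: dict[int, str] = {}
    # At most num_workers subtasks are in flight at once.
    workers = [asyncio.create_task(worker(queue, results)) for _ in range(num_workers)]
    await queue.join()  # wait until every subtask is marked done
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    # Synthesis step: combine worker outputs in the planned order.
    return "; ".join(results[i] for i in range(len(subtasks)))

if __name__ == "__main__":
    print(asyncio.run(orchestrate("agents and RAG and workflows")))
```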

Evaluator-Optimizer

aka Self-reflection

Looping

Arbitrary complexity
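Evaluator-optimizer is a loop: one call generates a draft, another critiques it, and the critique feeds the next attempt until the draft passes or a retry budget runs out. A minimal sketch, assuming hypothetical `generate` and `evaluate` functions in place of two LLM prompts:

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Verdict:
    passed: bool
    feedback: str

# Hypothetical generator: a real one would prompt an LLM, including the feedback.
def generate(topic: str, feedback: Optional[str]) -> str:
    draft = f"A short note about {topic}."
    if feedback:
        draft += " It includes an example."
    return draft

# Hypothetical evaluator: a real one would ask an LLM to grade the draft.
def evaluate(draft: str) -> Verdict:
    if "example" not in draft:
        return Verdict(False, "Add a concrete example.")
    return Verdict(True, "Looks good.")

def evaluator_optimizer(topic: str, max_rounds: int = 3) -> tuple[str, int]:
    feedback: Optional[str] = None
    draft = ""
    for round_number in range(1, max_rounds + 1):
        draft = generate(topic, feedback)
        verdict = evaluate(draft)
        if verdict.passed:
            return draft, round_number
        feedback = verdict.feedback  # loop back with the critique
    return draft, max_rounds  # budget exhausted: return the last attempt

if __name__ == "__main__":
    print(evaluator_optimizer("agents"))
```

The retry budget matters: without `max_rounds`, an evaluator that never passes would loop forever.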

Tool use

```python
def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the result."""
    return a * b
```
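An agent framework turns a plain function like `multiply` into a tool by reading its name, docstring, and type hints and describing them to the LLM. A minimal sketch of that idea using the standard library's `inspect` module; the schema layout below is a simplified, hypothetical JSON-schema-style shape, not LlamaIndex's internal format (in LlamaIndex, `FunctionTool.from_defaults(fn=multiply)` handles this step):

```python
import inspect
from typing import Any, Callable, get_type_hints

# Map Python annotations to JSON-schema-style type names (simplified subset).
PY_TO_JSON = {int: "integer", float: "number", str: "string", bool: "boolean"}

def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the result."""
    return a * b

def tool_schema(fn: Callable[..., Any]) -> dict[str, Any]:
    hints = get_type_hints(fn)
    params = inspect.signature(fn).parameters
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",  # the docstring tells the LLM what the tool does
        "parameters": {
            "type": "object",
            "properties": {
                # Unannotated or unknown types fall back to "string".
                name: {"type": PY_TO_JSON.get(hints.get(name), "string")}
                for name in params
            },
            "required": [
                name for name, p in params.items() if p.default is inspect.Parameter.empty
            ],
        },
    }

if __name__ == "__main__":
    print(tool_schema(multiply))
```

This is why the docstring and type hints on a tool function matter: they are most of what the model sees.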

FunctionAgent

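```python
from llama_index.core.agent.workflow import FunctionAgent
from llama_index.core.tools import FunctionTool

multiply_tool = FunctionTool.from_defaults(fn=multiply)  # multiply is defined above

agent = FunctionAgent(
    tools=[multiply_tool],
    llm=llm,  # any function-calling LLM, e.g. one from llama_index.llms.openai
    system_prompt="You are a helpful agent",
)

# FunctionAgent is async: await run() inside an async function or a notebook.
response = await agent.run(user_msg="What is 3 times 4?")
```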
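Under the hood, a function-calling agent runs a loop: send the conversation to the LLM, execute any tool call it requests, append the result, and repeat until the model answers in plain text. A minimal sketch of that general loop, assuming a hypothetical scripted `fake_llm` in place of a real model and a hypothetical message format; it illustrates the technique and is not FunctionAgent's actual implementation:

```python
import json
from typing import Any, Callable

def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the result."""
    return a * b

TOOLS: dict[str, Callable[..., Any]] = {"multiply": multiply}

# Hypothetical model: requests the tool once, then answers using the tool's result.
def fake_llm(messages: list[dict[str, str]]) -> dict[str, str]:
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "multiply", "arguments": json.dumps({"a": 3, "b": 4})}
    return {"content": f"3 times 4 is {tool_results[-1]['content']}."}

def run_agent(user_msg: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_msg}]
    for _ in range(max_steps):
        reply = fake_llm(messages)
        if "tool" not in reply:
            return reply["content"]  # plain answer: the loop ends
        # Execute the requested tool with the model-supplied JSON arguments.
        result = TOOLS[reply["tool"]](**json.loads(reply["arguments"]))
        messages.append({"role": "tool", "content": str(result)})
    raise RuntimeError("agent did not finish within max_steps")

if __name__ == "__main__":
    print(run_agent("What is 3 times 4?"))
```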

Multi-agent systems

AgentWorkflow

```python
from llama_index.core.agent.workflow import FunctionAgent

# search_web and record_notes are tool functions defined in the full tutorial.
research_agent = FunctionAgent(
    name="ResearchAgent",
    description="Useful for searching the web for information on a given topic and recording notes on the topic.",
    system_prompt=(
        "You are the ResearchAgent that can search the web for information on a given topic and record notes on the topic. "
        "Once notes are recorded and you are satisfied, you should hand off control to the WriteAgent to write a report on the topic."
    ),
    llm=llm,
    tools=[search_web, record_notes],
    can_handoff_to=["WriteAgent"],
)
```

Multi-agent system as a one-liner

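```python
from llama_index.core.agent.workflow import AgentWorkflow

# write_agent and review_agent are FunctionAgents built like research_agent.
agent_workflow = AgentWorkflow(
    agents=[research_agent, write_agent, review_agent],
    root_agent=research_agent.name,
    initial_state={
        "research_notes": {},
        "report_content": "Not written yet.",
        "review": "Review required.",
    },
)
```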

Full AgentWorkflow and Workflows tutorial
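In a handoff-style multi-agent system, one agent is active at a time, every agent reads and writes a shared state, and an agent may pass control only to agents listed in its `can_handoff_to`. A minimal sketch of that control flow with hypothetical stand-in agents (plain functions instead of LLM-backed agents); it mirrors the `root_agent`, `can_handoff_to`, and `initial_state` ideas in the AgentWorkflow example but is not how AgentWorkflow is implemented:

```python
import copy
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Agent:
    name: str
    act: Callable[[dict], Optional[str]]  # updates state, returns next agent name or None
    can_handoff_to: tuple = ()

# Hypothetical agents: each would be an LLM with tools in a real system.
def research(state: dict) -> Optional[str]:
    state["research_notes"]["agents"] = "Agents use tools to reach a goal."
    return "WriteAgent"

def write(state: dict) -> Optional[str]:
    notes = " ".join(state["research_notes"].values())
    state["report_content"] = f"Report: {notes}"
    return "ReviewAgent"

def review(state: dict) -> Optional[str]:
    ok = state["report_content"].startswith("Report:")
    state["review"] = "Approved." if ok else "Rewrite needed."
    return None  # no handoff: the workflow ends

def run_workflow(agents: list, root_agent: str, initial_state: dict, max_turns: int = 10) -> dict:
    by_name = {agent.name: agent for agent in agents}
    state = copy.deepcopy(initial_state)  # don't mutate the caller's dict
    current: Optional[str] = root_agent
    turns = 0
    while current is not None:
        if turns == max_turns:
            raise RuntimeError("workflow did not finish within max_turns")
        agent = by_name[current]
        next_agent = agent.act(state)
        # Enforce handoff rules: only agents listed in can_handoff_to may take over.
        if next_agent is not None and next_agent not in agent.can_handoff_to:
            raise ValueError(f"{agent.name} cannot hand off to {next_agent}")
        current = next_agent
        turns += 1
    return state

if __name__ == "__main__":
    team = [
        Agent("ResearchAgent", research, ("WriteAgent",)),
        Agent("WriteAgent", write, ("ReviewAgent",)),
        Agent("ReviewAgent", review),
    ]
    start = {"research_notes": {}, "report_content": "Not written yet.", "review": "Review required."}
    print(run_workflow(team, "ResearchAgent", start))
```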

Giving agents access to data with RAG

Basic RAG pipeline

RAG in 5 lines

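```python
from llama_index.core import VectorStoreIndex
from llama_parse import LlamaParse  # reads LLAMA_CLOUD_API_KEY from the environment

documents = LlamaParse().load_data("./myfile.pdf")
index = VectorStoreIndex.from_documents(documents)
query_engine = index.as_query_engine()
response = query_engine.query("What did the author do growing up?")
print(response)
```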

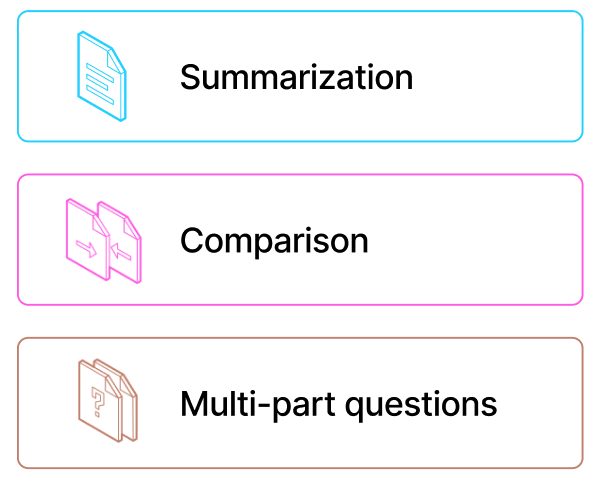
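The five lines of "RAG in 5 lines" hide the core retrieval loop: embed chunks, embed the query, pick the most similar chunks, and hand them to the LLM as context. A minimal sketch of the retrieval half, assuming a toy bag-of-words "embedding" and cosine similarity in place of a real embedding model and vector store; the prompt template is hypothetical:

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    # Toy embedding: lowercase word counts. Real systems use a learned embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    q = embed(query)
    # Rank every chunk by similarity to the query and keep the best top_k.
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]

def build_prompt(query: str, chunks: list[str], top_k: int = 2) -> str:
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

if __name__ == "__main__":
    chunks = [
        "The author grew up writing short stories.",
        "Interest rates rose in 2023.",
        "The author also programmed an IBM 1401 growing up.",
    ]
    print(build_prompt("What did the author do growing up?", chunks))
```

Only the top-k chunks reach the LLM, which is also why whole-document questions like summarization are hard for plain RAG.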

RAG limitations

Summarization

Solve it with: routing, parallelization

Comparison

Solve it with: parallelization

Multi-part questions

Solve it with: chaining, parallelization
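A comparison question can fail with a single retrieval because the top chunks may all describe one side. One way to apply parallelization (and then chaining into a final answer) is to split the question into one sub-question per entity, answer each concurrently, and combine the results; LlamaIndex's `SubQuestionQueryEngine` packages a similar idea. A minimal sketch, assuming a naive regex `decompose` in place of an LLM and a hypothetical `answer_subquestion` stub, backed by a small hypothetical fact table, in place of a query engine:

```python
import asyncio
import re

# Hypothetical per-entity knowledge; a real system would query an index per sub-question.
FACTS = {
    "python": "Python docs live at docs.llamaindex.ai.",
    "typescript": "TypeScript docs live at ts.llamaindex.ai.",
}

def decompose(question: str) -> list[str]:
    # Naive split for questions shaped like "Compare A and B"; an LLM would do this in practice.
    match = re.search(r"compare (\w+) and (\w+)", question.lower())
    if not match:
        return [question]
    return [f"What about {entity}?" for entity in match.groups()]

async def answer_subquestion(sub_question: str) -> str:
    await asyncio.sleep(0.01)  # stands in for a retrieval + LLM call
    entity = sub_question.removeprefix("What about ").rstrip("?")
    return FACTS.get(entity, "No information found.")

async def answer(question: str) -> str:
    sub_questions = decompose(question)
    # Parallelization: all sub-questions are answered concurrently.
    partials = await asyncio.gather(*(answer_subquestion(q) for q in sub_questions))
    # Chaining: in a real system these partial answers would feed a final synthesis prompt.
    return " ".join(partials)

if __name__ == "__main__":
    print(asyncio.run(answer("Compare Python and TypeScript docs")))
```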

What's next?

Thanks!

Follow me on Bluesky:

🦋 @seldo.com

Building real-world Agentic applications with LlamaIndex (ODSC East)

By Laurie Voss
