Imitation learning \(\Rightarrow\) foundation models

MIT 6.821: Underactuated Robotics

Spring 2024, Lecture 24

Follow live at https://slides.com/d/ssAmqBQ/live

(or later at https://slides.com/russtedrake/spring24-lec24)

Image credit: Boston Dynamics

Levine*, Finn*, Darrel, Abbeel, JMLR 2016

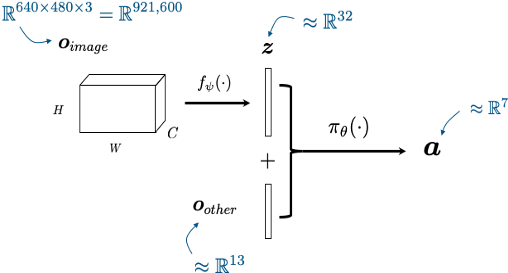

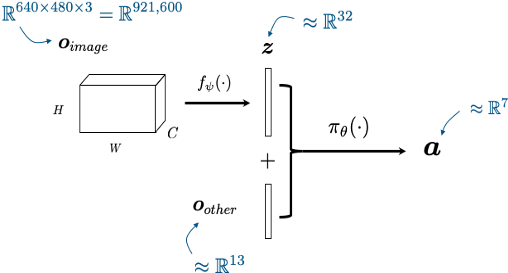

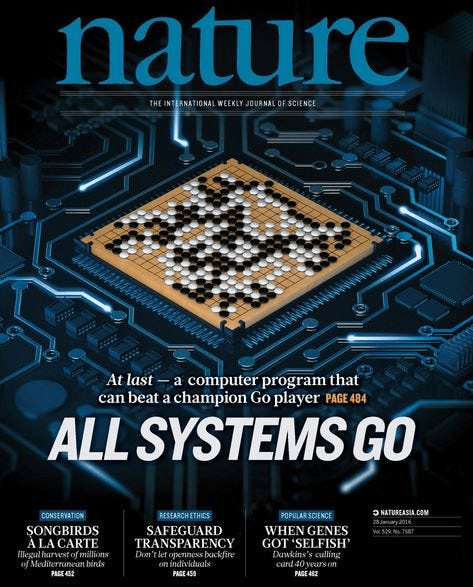

Key advance: visuomotor policies

perception network

(often pre-trained)

policy network

other robot sensors

learned state representation

actions

x history

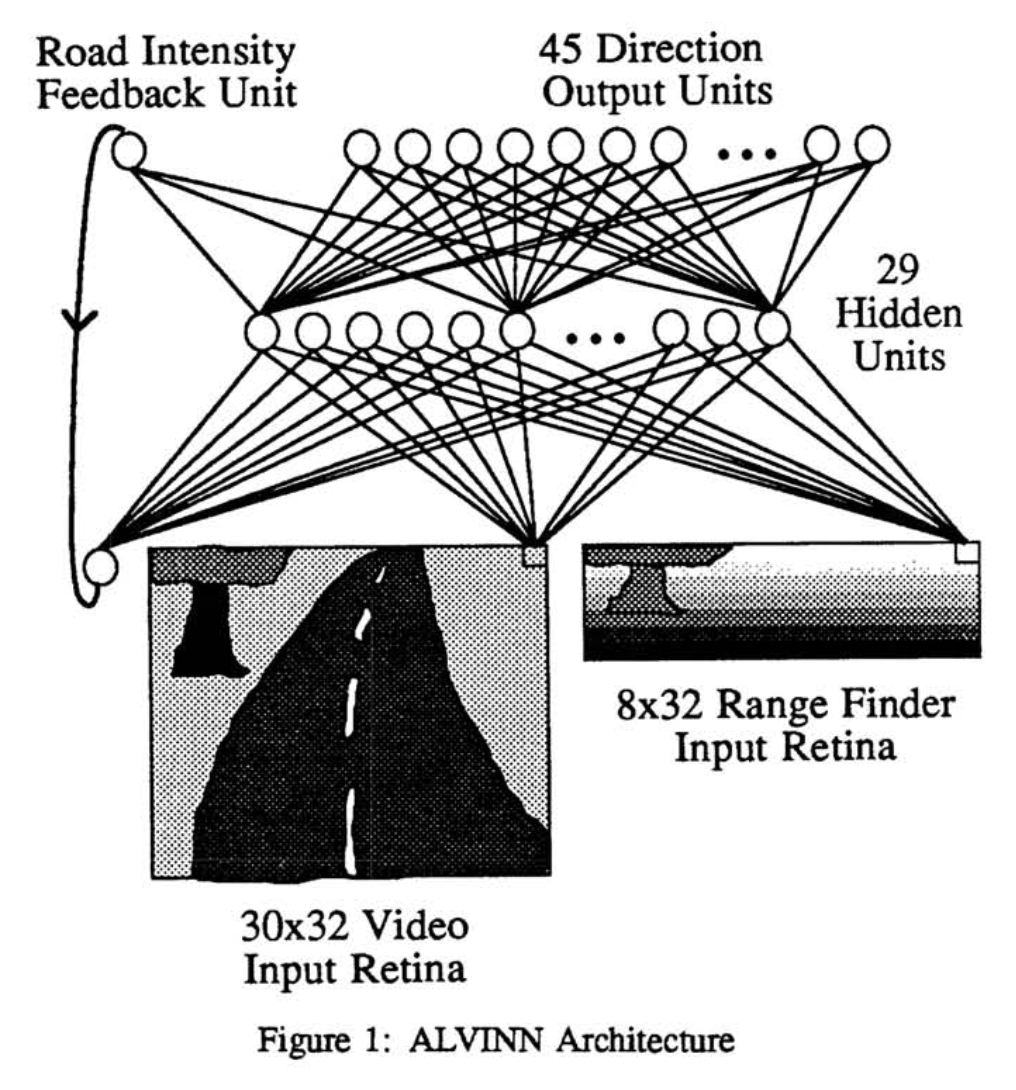

NeurIPS 1988

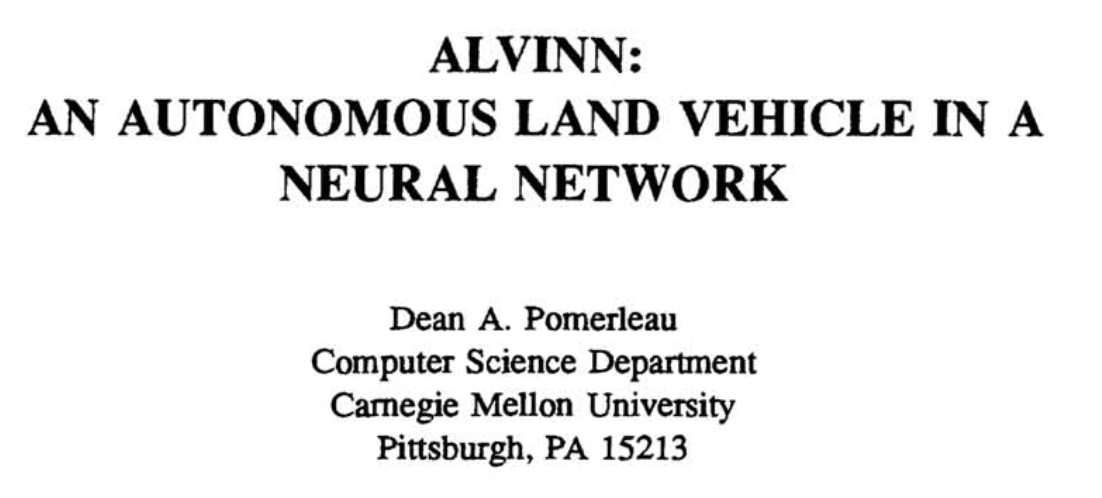

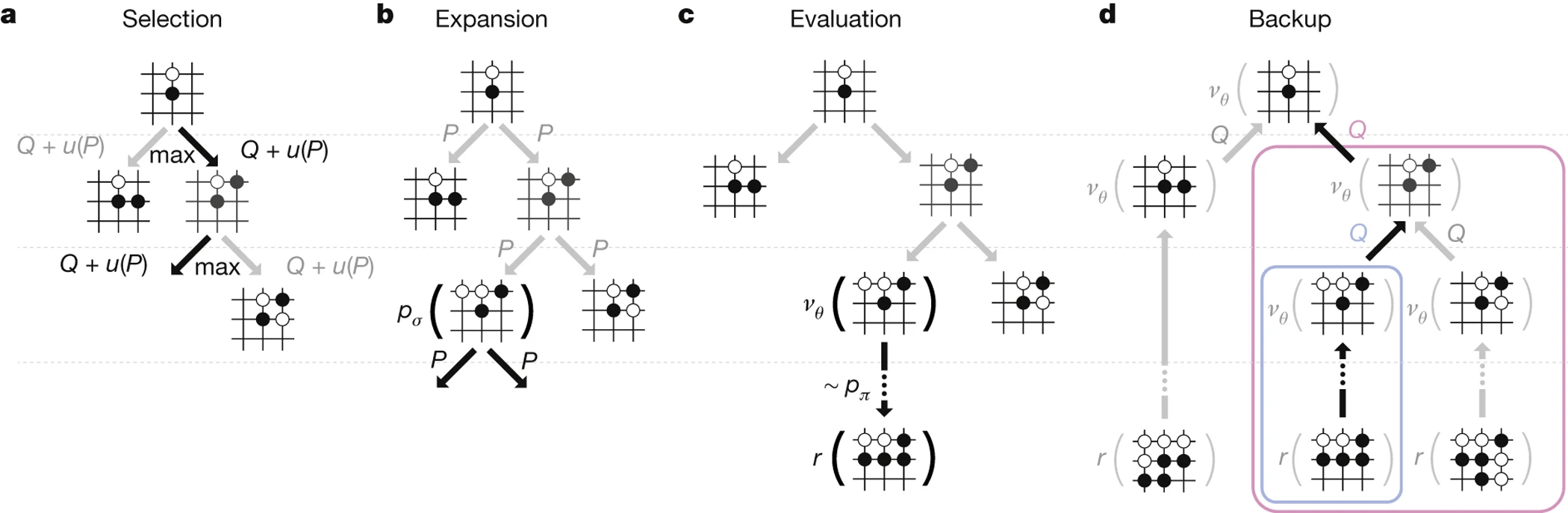

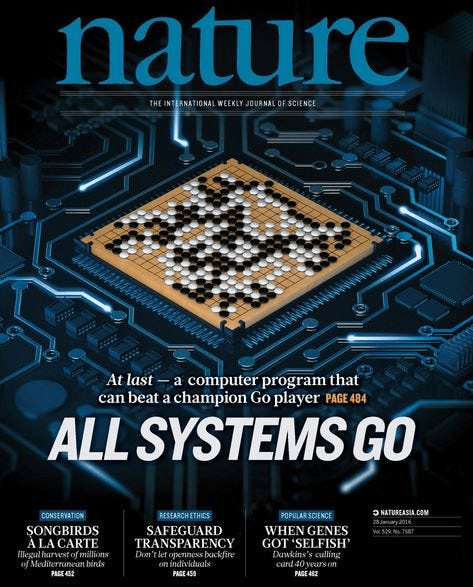

AlphaGo

- Step 1: Behavior Cloning

- from human expert games

- Step 2: Self-play

- Policy network

- Value network

- Monte Carlo tree search (MCTS)

A very simple teleop interface

Andy Zeng's MIT CSL Seminar, April 4, 2022

Andy's slides.com presentation

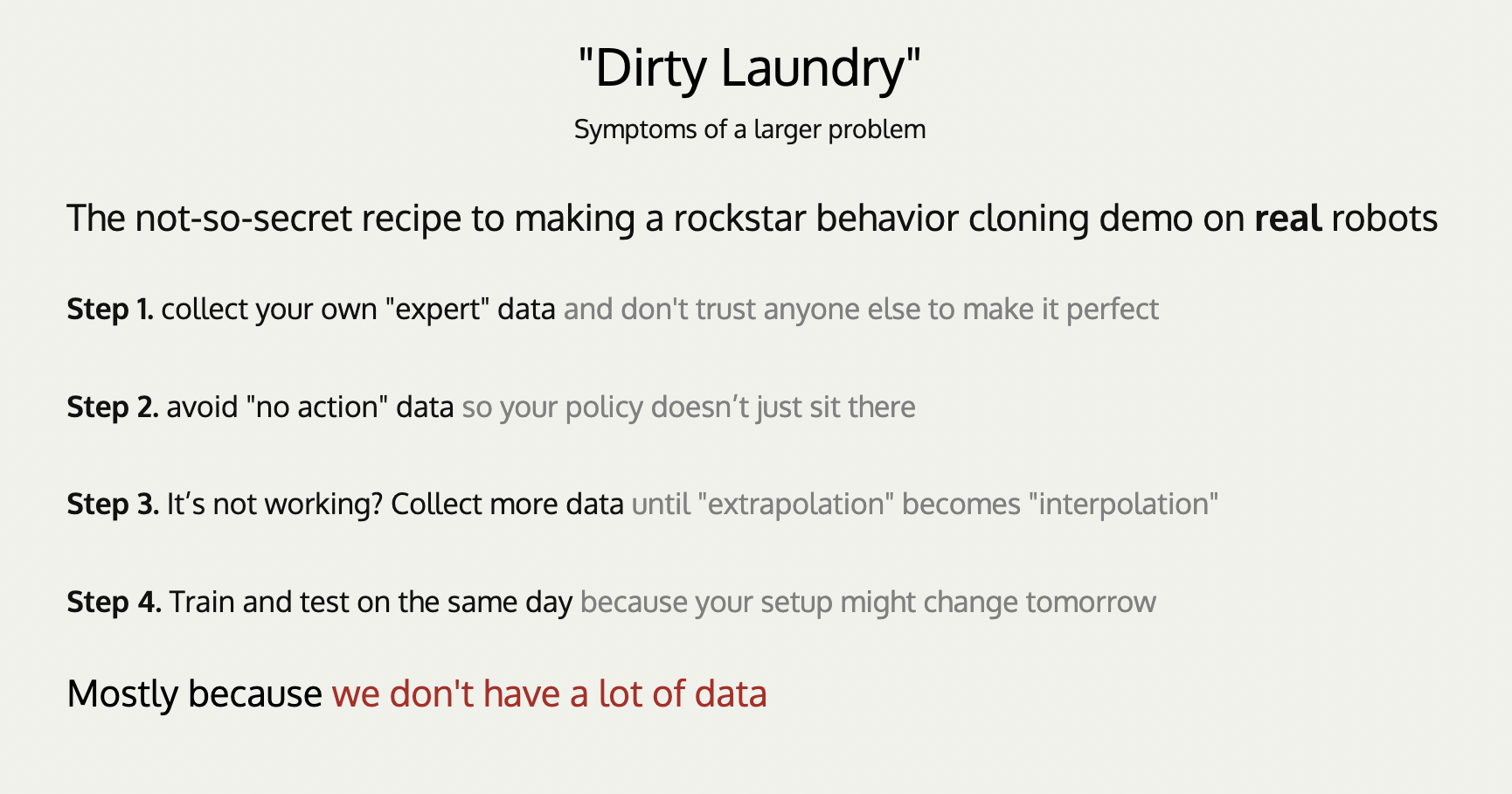

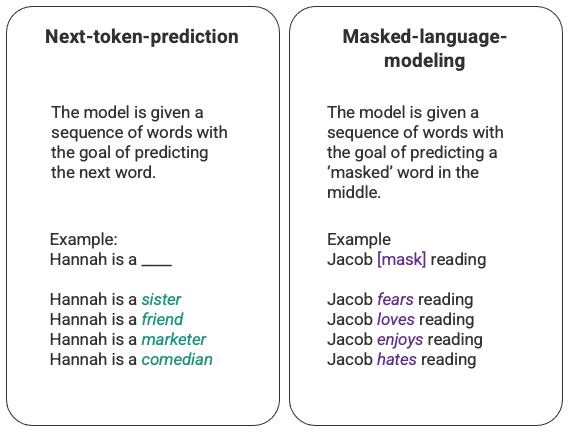

"Self-supervised" learning

Example: Text completion

No extra "labeling" of the data required!

GPT models are (also) trained with behavior cloning

But it's trained on the entire internet...

And it's a really big network

Generative AI for Images

Humans have also put lots of captioned images on the web

...

Dall-E 2. Tested in Sept, 2022

"A painting of a professor giving a talk at a robotics competition kickoff"

Input:

Output:

Dall-E 3. October 2023

"a painting of a handsome MIT professor giving a talk about robotics and generative AI at a high school in newton, ma"

Input:

Output:

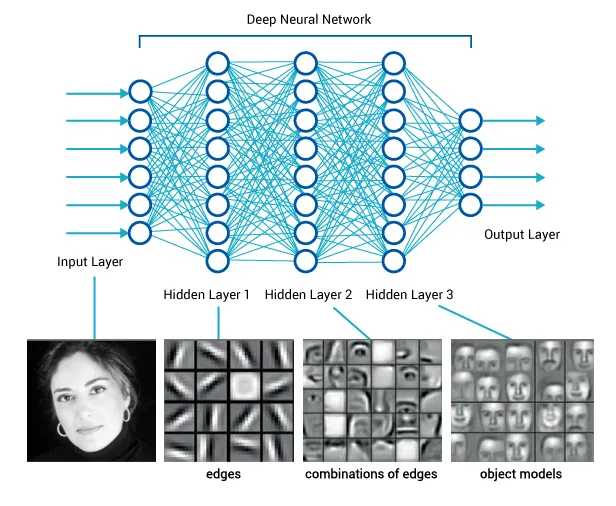

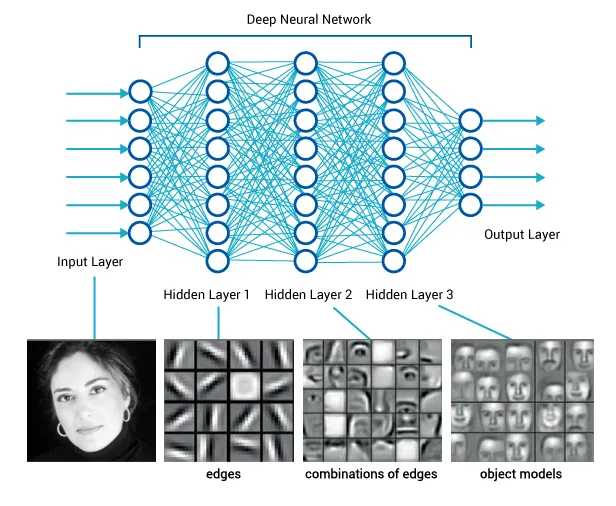

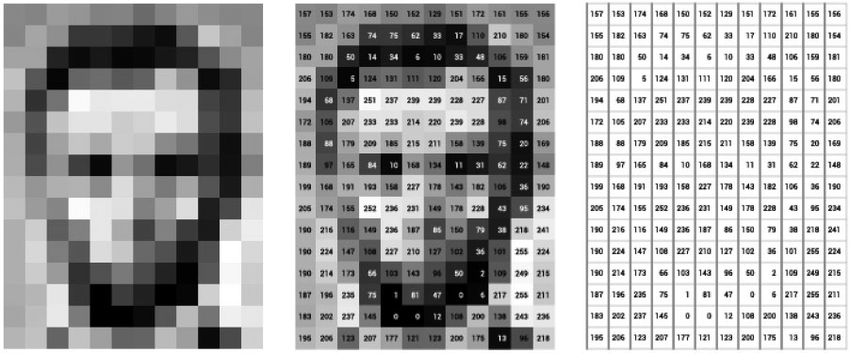

An image is just a list of numbers (pixel values)

Is Dall-E just next pixel prediction?

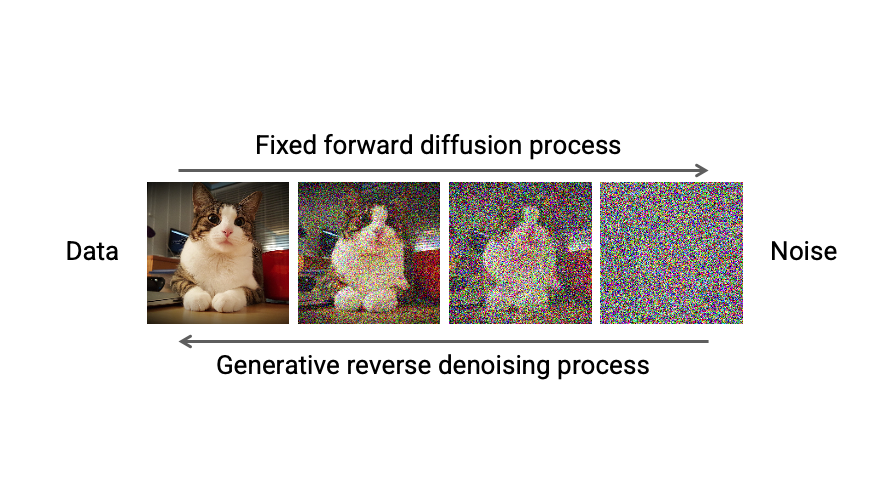

"Diffusion" models

great tutorial: https://chenyang.co/diffusion.html

great tutorial: https://chenyang.co/diffusion.html

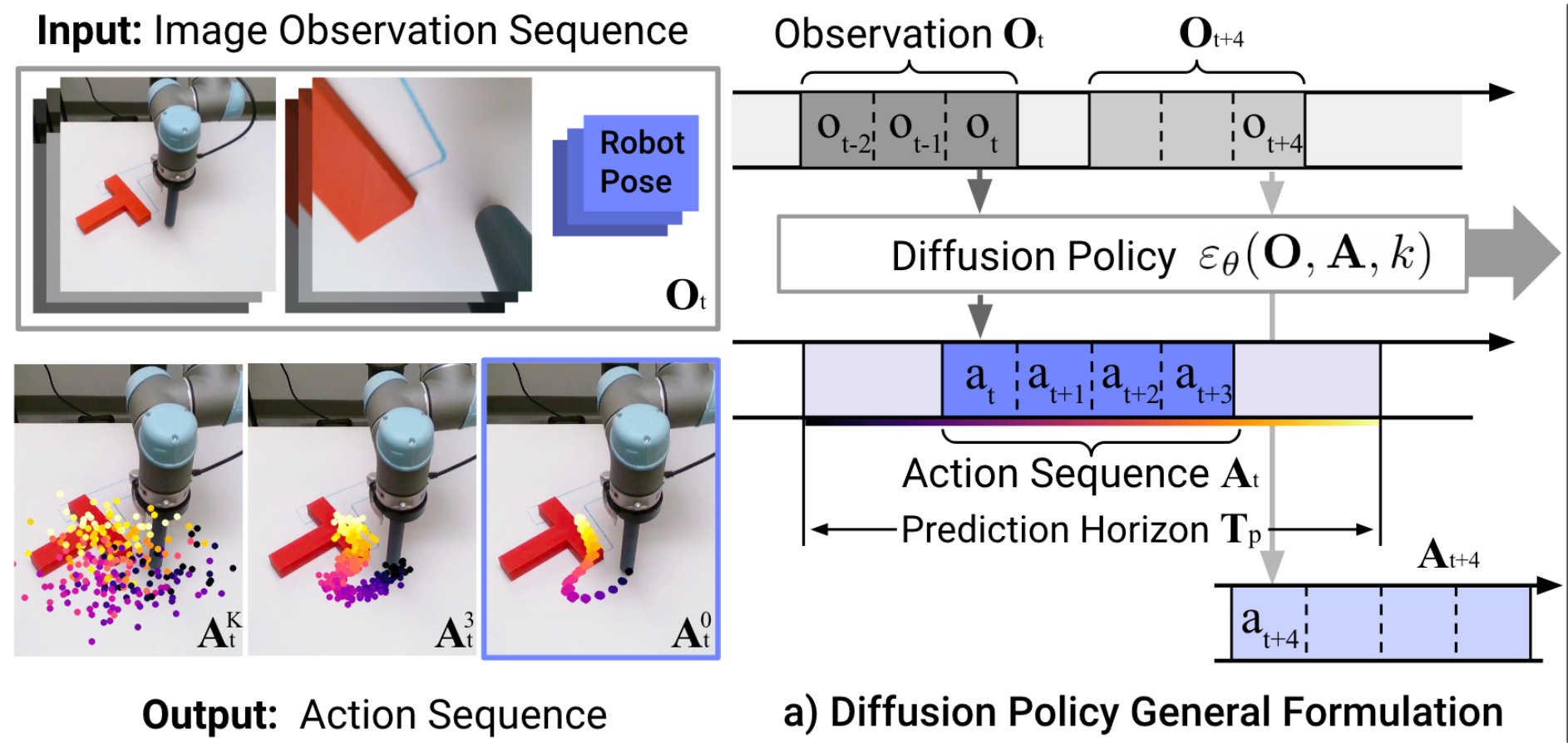

Image backbone: ResNet-18 (pretrained on ImageNet)

Total: 110M-150M Parameters

Training Time: 3-6 GPU Days ($150-$300)

Representing dynamic output feedback

input

output

Control Policy

(as a dynamical system)

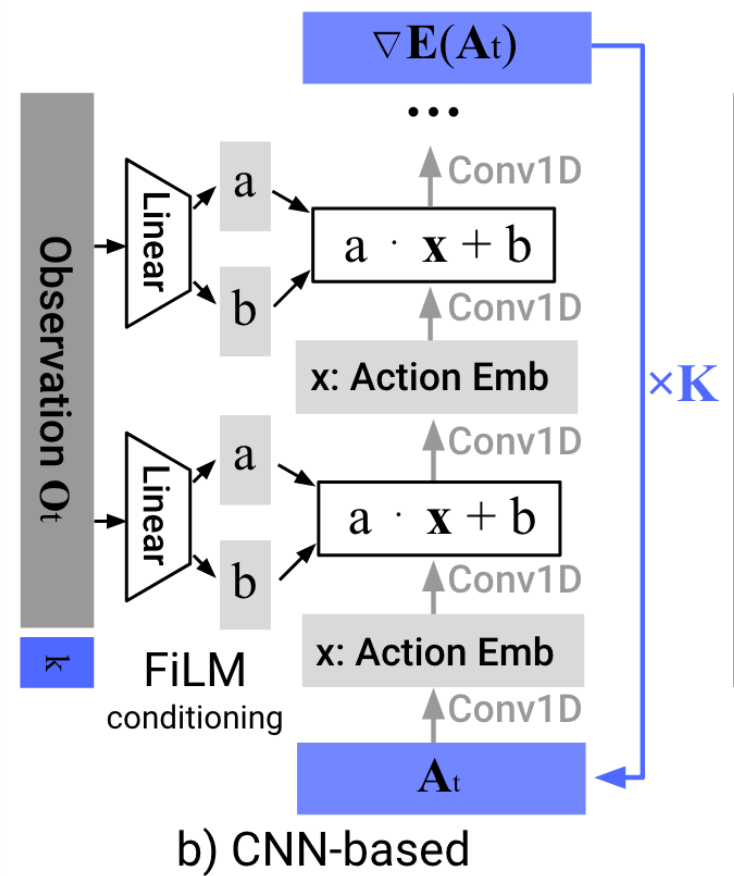

"Diffusion Policy" is an auto-regressive (ARX) model with forecasting

\(H\) is the length of the history,

\(P\) is the length of the prediction

Conditional denoiser produces the forecast, conditional on the history

(Often) Reactive

Discrete/branching logic

Long horizon

Limited "Generalization"

(when training a single skill)

a few new skills...

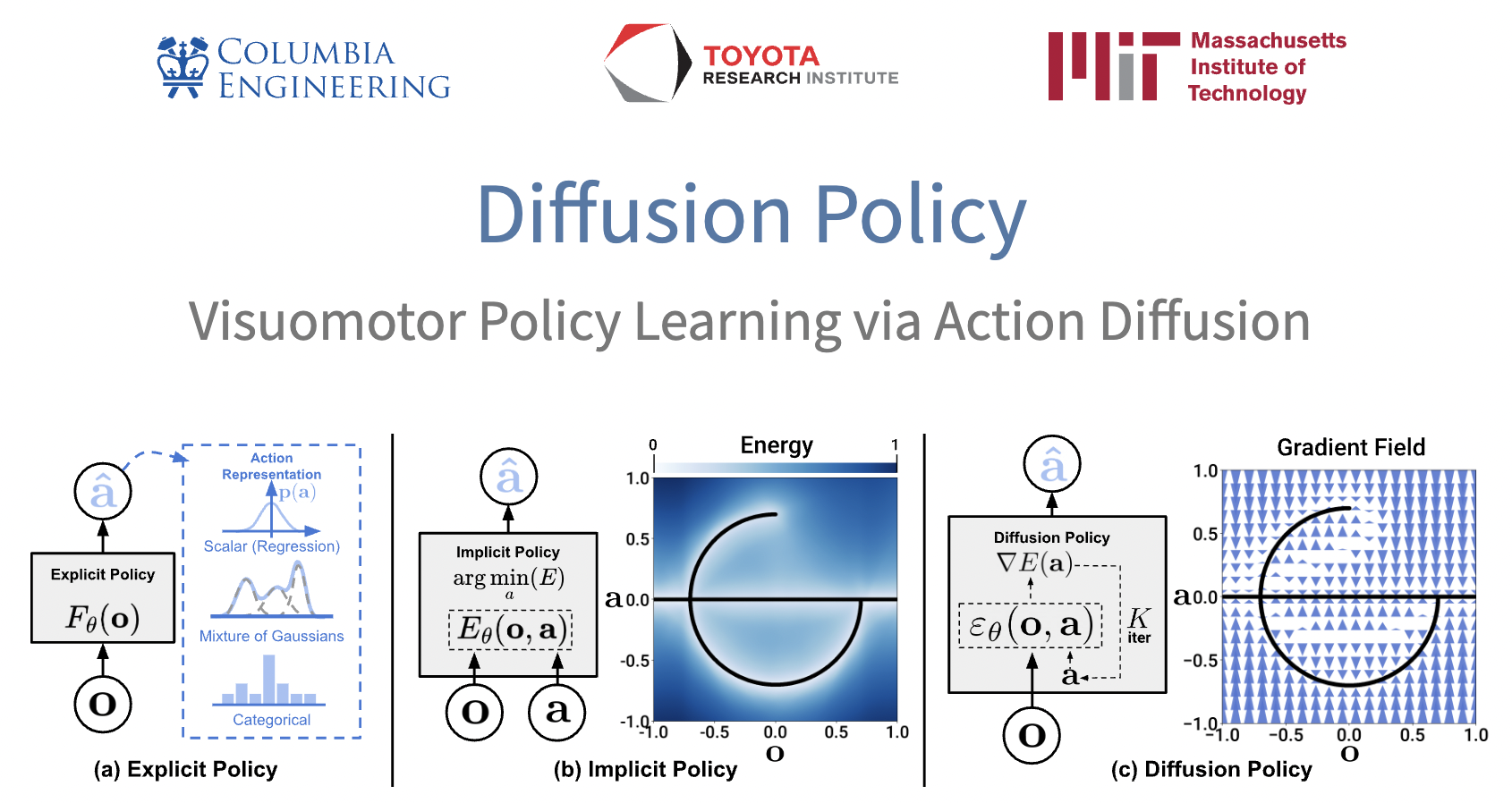

Why (Denoising) Diffusion Models?

- High capacity + great performance

- Small number of demonstrations (typically ~50-100)

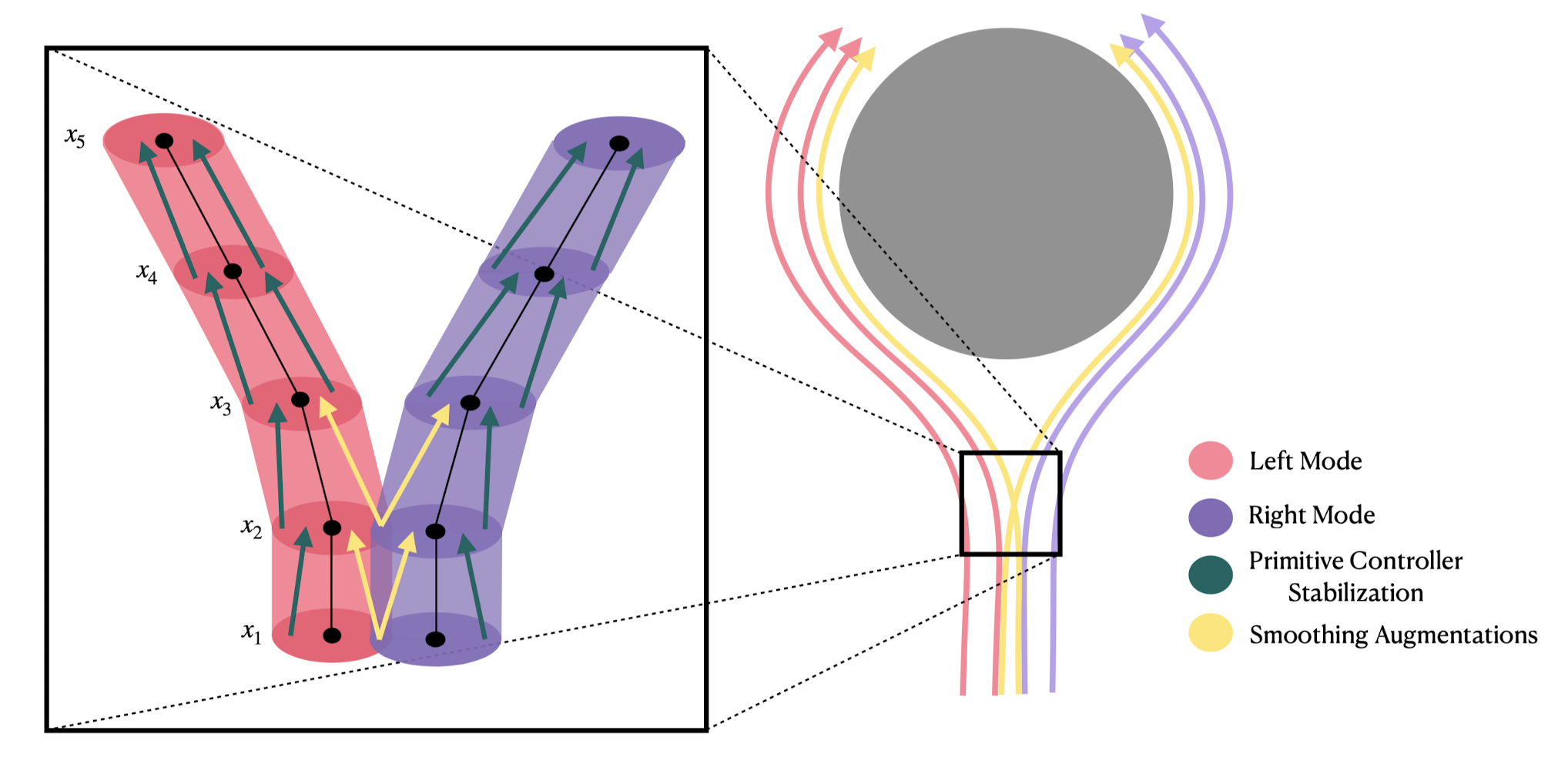

- Multi-modal (non-expert) demonstrations

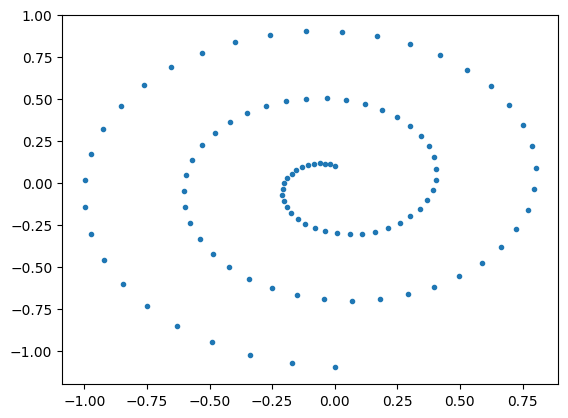

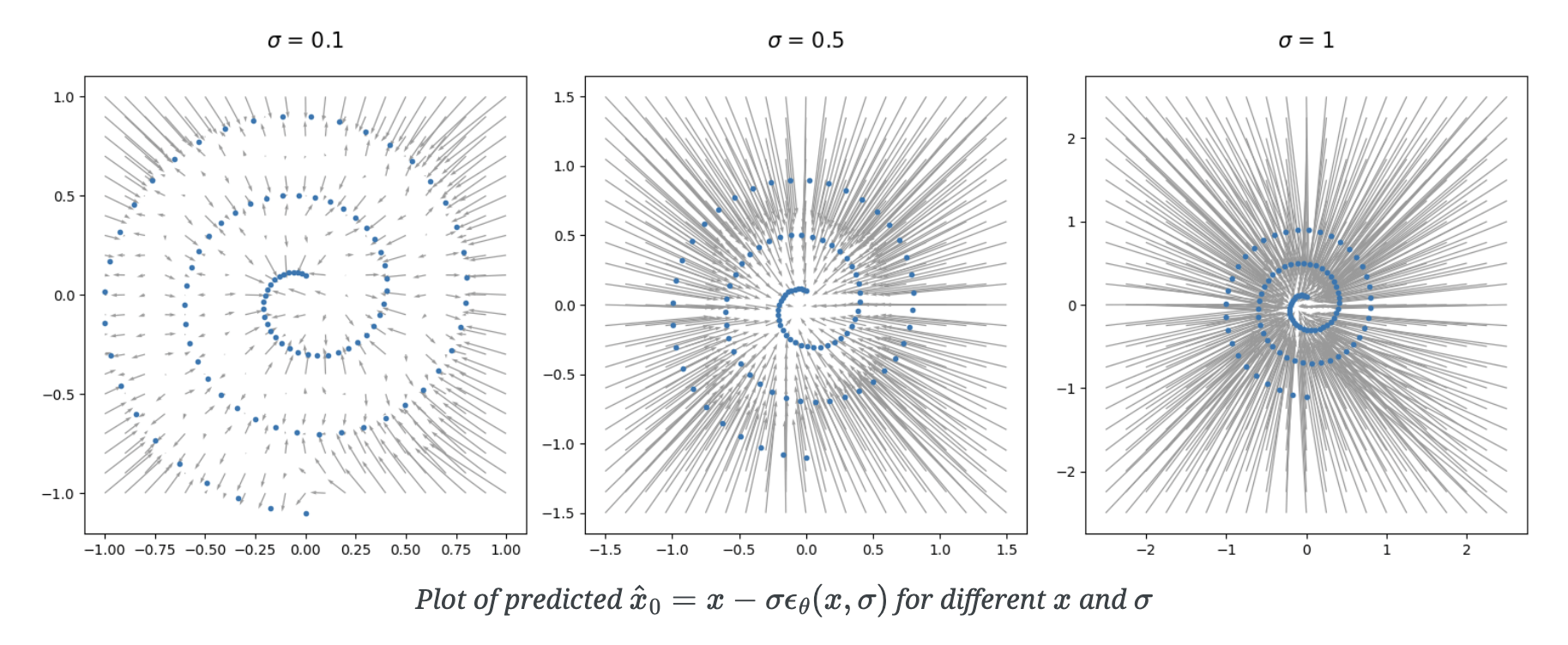

Learns a distribution (score function) over actions

e.g. to deal with "multi-modal demonstrations"

Learning categorial distributions already worked well (e.g. AlphaGo)

Diffusion helped extend this to high-dimensional continuous trajectories

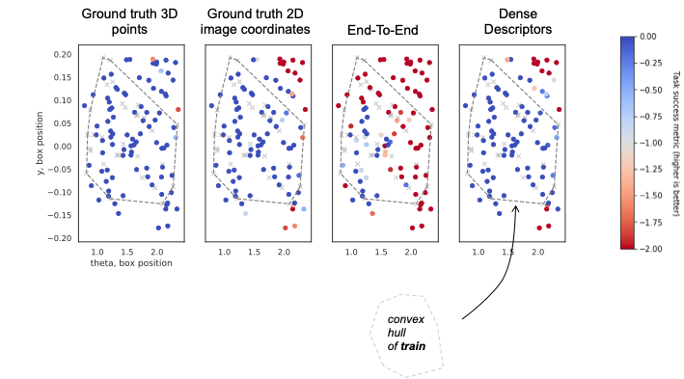

Distribution shift

Why (Denoising) Diffusion Models?

- High capacity + great performance

- Small number of demonstrations (typically ~50)

- Multi-modal (non-expert) demonstrations

- Training stability and consistency

- no hyper-parameter tuning

- Generates high-dimension continuous outputs

- vs categorical distributions (e.g. RT-1, RT-2)

- CVAE in "action-chunking transformers" (ACT)

- Solid mathematical foundations (score functions)

- Reduces nicely to the simple cases (e.g. LQG / Youla)

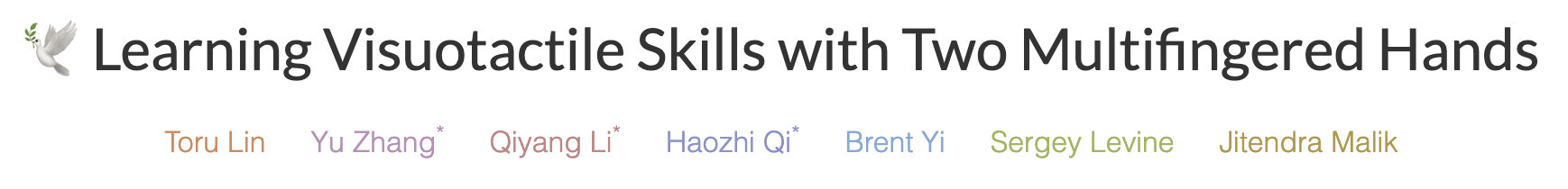

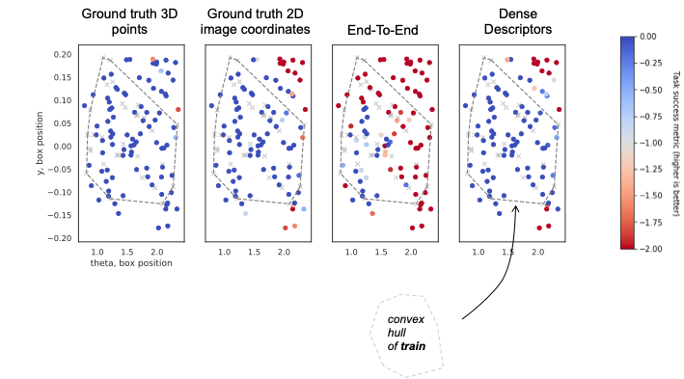

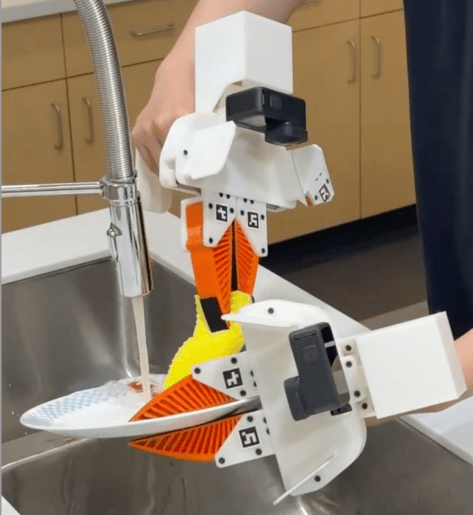

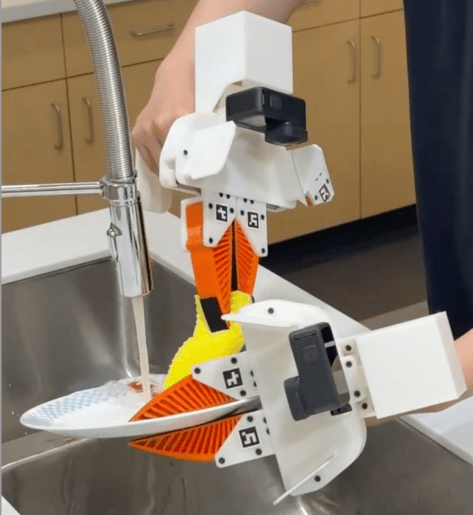

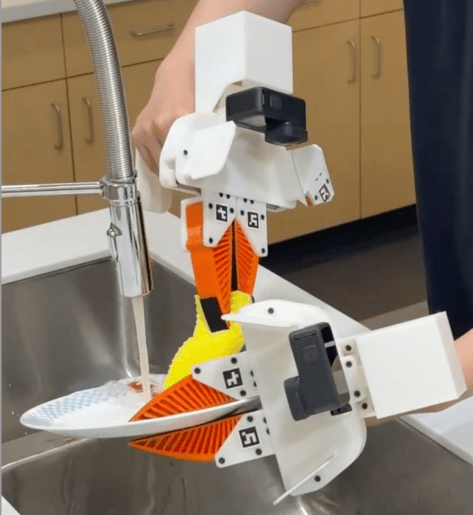

Diffusion Policy for Dexterous HANDs?

Enabling technologies

Haptic Teleop Interface

Excellent system identification / robot control

Visuotactile sensing

with TRI's Soft Bubble Gripper

Open source:

ALOHA

Mobile ALOHA

But there are definitely limits to the single-task models..

LLMs \(\Rightarrow\) VLMs \(\Rightarrow\) LBMs

large language models

visually-conditioned language models

large behavior models

\(\sim\) VLA (vision-language-action)

\(\sim\) EFM (embodied foundation model)

Q: Is predicting actions fundamentally different?

Why actions (for dexterous manipulation) could be different:

- Actions are continuous (language tokens are discrete)

- Have to obey physics, deal with stochasticity

- Feedback / stability

- ...

should we expect similar generalization / scaling-laws?

Success in (single-task) behavior cloning suggests that these are not blockers

Prediction actions is different

- We don't have internet scale action data (yet)

- We still need rigorous/scalable "Eval"

Prediction actions is different

- We don't have internet scale action data (yet)

- We need rigorous/scalable "Eval"

The Robot Data Diet

Big data

Big transfer

Small data

No transfer

robot teleop

(the "transfer learning bet")

Open-X

simulation rollouts

novel devices

Action prediction as representation learning

In both ACT and Diffusion Policy, predicting sequences of actions seems very important

Thought experiment:

To predict future actions, must learn

dynamics model

task-relevant

demonstrator policy

dynamics

Cumulative Number of Skills Collected Over Time

The (bimanual, dexterous) TRI CAM dataset

CAM data collect

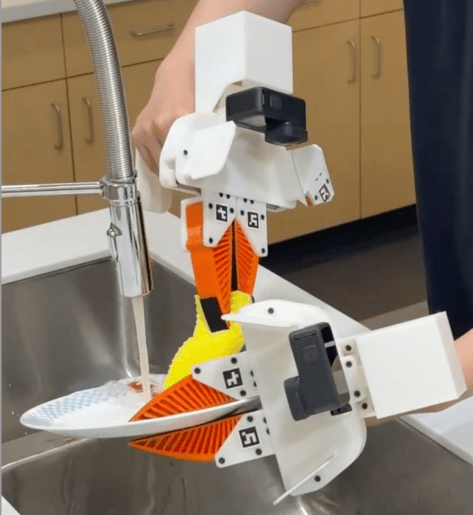

The DROID dataset

w/ Chelsea Finn and Sergey Levine

The Robot Data Diet

Big data

Big transfer

Small data

No transfer

robot teleop

(the "transfer learning bet")

Open-X

simulation rollouts

novel devices

w/ Shuran Song

The Robot Data Diet

Big data

Big transfer

Small data

No transfer

robot teleop

(the "transfer learning bet")

Open-X

simulation rollouts

novel devices

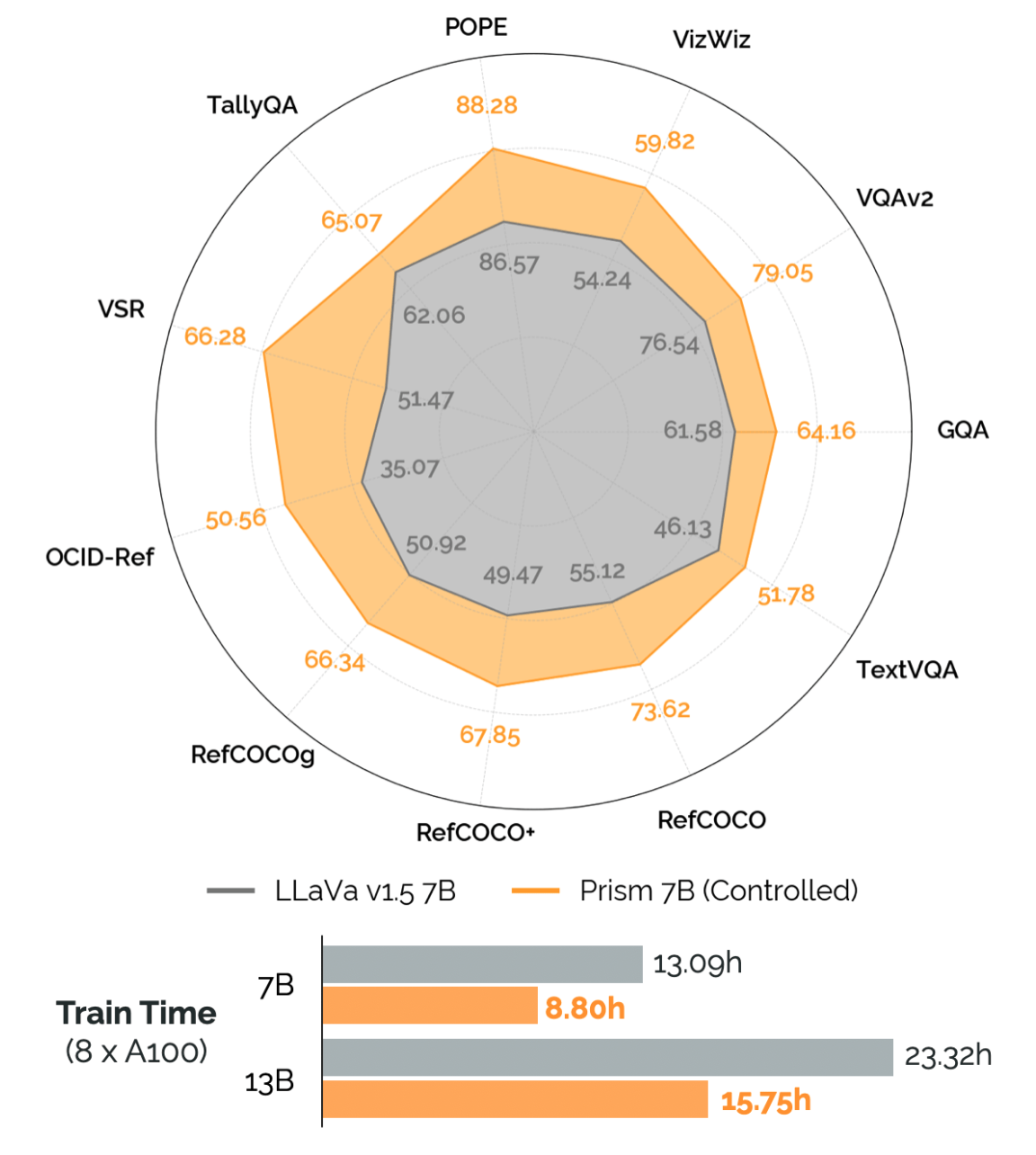

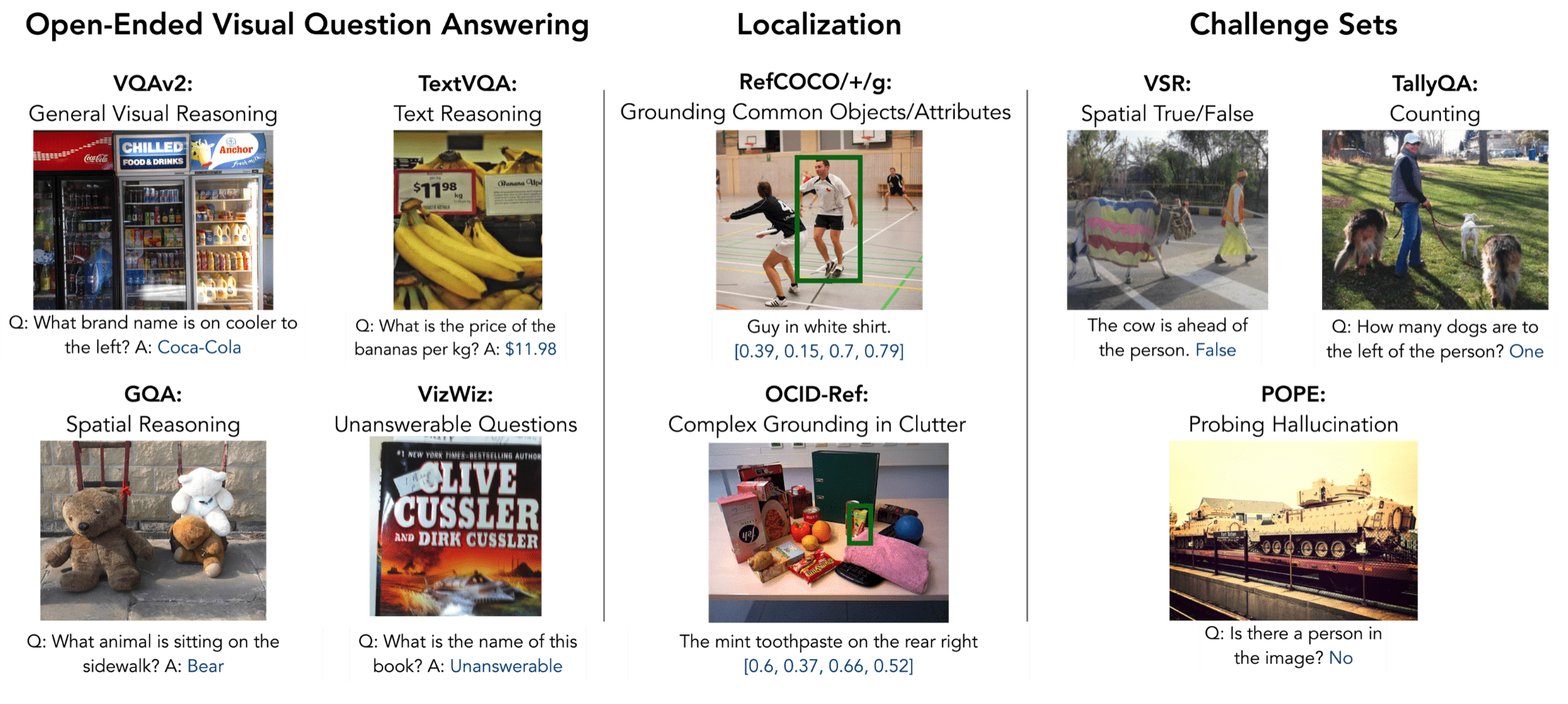

Prismatic VLMs

w/ Dorsa Sadigh

Fine-grained evaluation suite across a number of different visual reasoning tasks

Prismatic VLMS \(\Rightarrow\) Open-VLA

Video Diffusion

w/ Carl Vondrick

This is just Phase 1

Enough to make robots useful (~ GPT-2?)

\(\Rightarrow\) get more robots out in the world

\(\Rightarrow\) establish the data flywheel

Then we get into large-scale distributed (fleet) learning...

The AlphaGo Playbook

- Step 1: Behavior Cloning

- from human expert games

- Step 2: Self-play

- Policy network

- Value network

- Monte Carlo tree search (MCTS)

Scaling Monte-Carlo Tree Search

"Graphs of Convex Sets" (GCS)

Prediction actions is different

- We don't have internet scale action data (yet)

- We need rigorous/scalable "Eval"

Eval with real robots (it's hard!)

Example: we asked the robot to make a salad...

Eval with real robots

Rigorous hardware eval (Blind, randomized testing, etc)

But in hardware, you can never run the same experiment twice...

Simulation Eval / Benchmark

Wrap-up

A foundation model for manipulation, because...

- start the data flywheel for general purpose robots

- unlock the new science of visuomotor "intelligence" (with aspects that can only be studied at scale)

Some (not all!) of these basic research questions require scale

There is so much we don't yet understand... many open algorithmic challenges