Gerard Sans

Google Developer Expert

Developer Evangelist

International Speaker

Spoken 200 times in 43 countries

Alien cookbook

featuring Kang and Kodos

A tale of missing context and language nuance

FOR

HOW TO

Google AI Learning Path

Vertex AI

Complexity

Features

Gemini models landscape

Gemini for Open Source: Gemma

Responsible AI

Reduce Biases

Safe

Accountable to people

Designed with Privacy

Scientific Excellence

Follow all Principles

Socially Beneficial

Not for Surveillance

Not Weaponised

Not Unlawful

Not Harmful

Google AI

Vertex AI

Pro 1.0

Pro 1.5

Ultra 1.0

Nano 1.0

New multi-modal architecture

(MoE)

1 Million tokens

Gemini 1.0 Pro 128K tokens

(100 pages)

Gemini 1.5 Pro 1M tokens

(800 pages)

1h video

11h audio

30K LOC

800 pages
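As a rough sanity check on these capacity figures, the sketch below divides the 1 million token window by each quantity quoted on the slide. The per-unit rates it prints are only averages implied by the slide's numbers, not measurements of the Gemini tokenizer, and the names `CONTEXT_TOKENS`, `CAPACITY` and `tokens_per_unit` are hypothetical helpers introduced for illustration.

```python
# Figures quoted on the slide; everything derived from them is an estimate.
CONTEXT_TOKENS = 1_000_000

# Quantity that fills the window, expressed in a convenient base unit.
CAPACITY = {
    "page": 800,                   # 800 pages of text
    "line of code": 30_000,        # 30K LOC
    "second of video": 1 * 3600,   # 1 hour of video
    "second of audio": 11 * 3600,  # 11 hours of audio
}


def tokens_per_unit(total_tokens: int, units: int) -> float:
    """Average number of tokens implied for one unit of content."""
    return total_tokens / units


rates = {name: tokens_per_unit(CONTEXT_TOKENS, n) for name, n in CAPACITY.items()}

for name, rate in rates.items():
    print(f"~{rate:,.1f} tokens per {name}")
```

Read this way, the slide implies about 1,250 tokens per page and about 33 tokens per line of code. These are useful ballpark numbers when judging whether a document set or repository is likely to fit in a single prompt.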

1 Million tokens

Text: Apollo 11 script

402 pages

Video: Buster Keaton movie

44 minutes

Code: three.js demos

100K LOC

A new age of AI-driven OSS

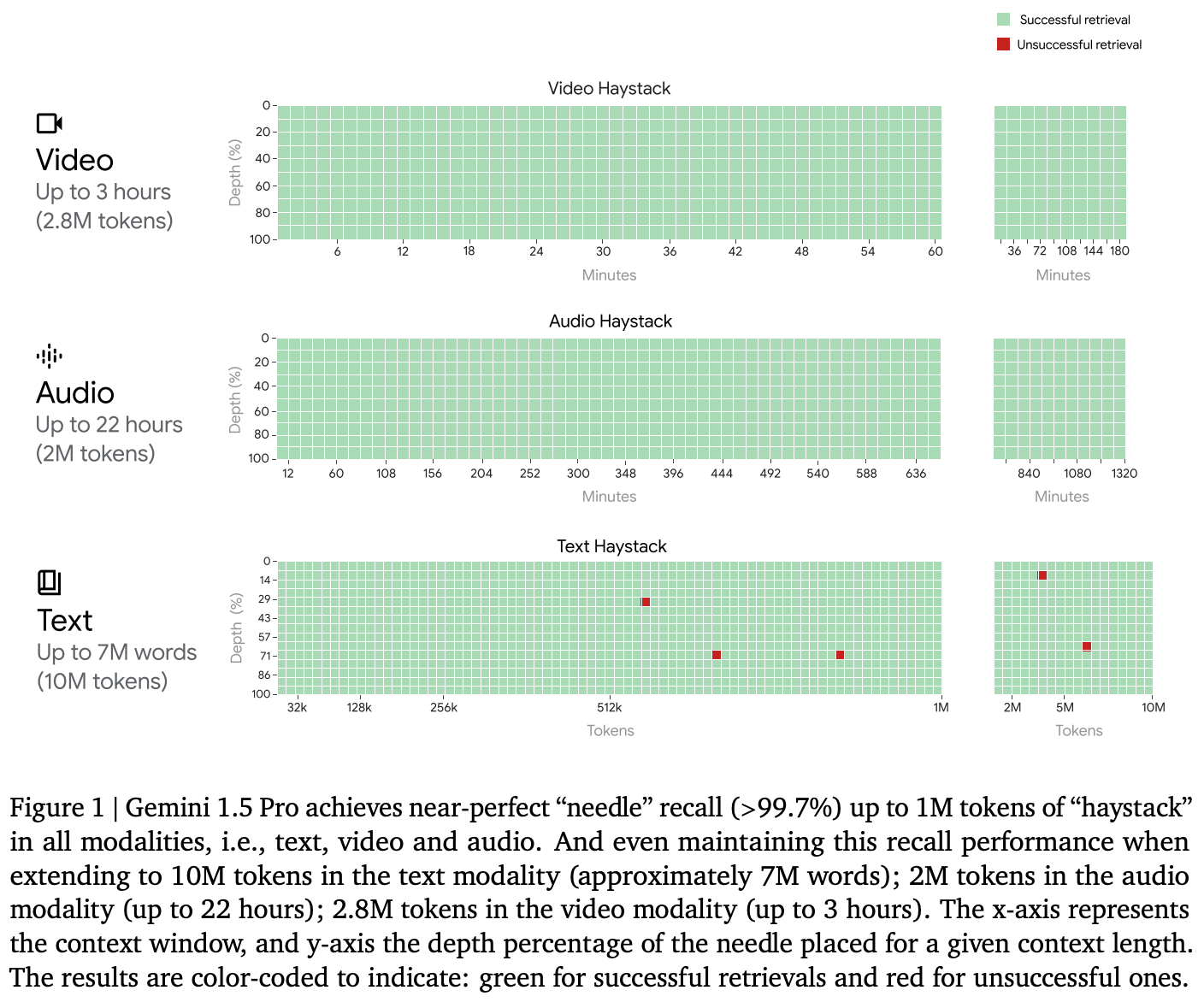

Exceptional recall for up to 10M tokens

RAG vs Massive Context

The Power of Context

Self-contained

Only what you need. Avoids fragmentation and relevance drift

Cost-effective

Lower investment over time, fixed query latency. No fixed costs

User-guided

Unified data for focus, easier to control and improve quality

Interpretable

Improved transparency, easier to debug & iterate
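One way to make the RAG versus massive context trade-off concrete is a simple size check: if the whole corpus fits in the window, send it as is; otherwise fall back to retrieval. The sketch below is a minimal illustration under stated assumptions. The ratio of four characters per token is a rough rule of thumb for English text rather than the Gemini tokenizer, and `estimate_tokens`, `choose_strategy` and the `reserved_tokens` headroom are hypothetical names and values, not part of any Google API.

```python
from typing import Iterable

# Assumption: ~4 characters per token, a coarse heuristic for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Estimate the token count of a string, rounding up."""
    return -(-len(text) // chars_per_token)  # ceiling division


def choose_strategy(
    documents: Iterable[str],
    context_tokens: int = 1_000_000,  # window size quoted in the slides
    reserved_tokens: int = 8_000,     # hypothetical headroom for prompt and answer
) -> str:
    """Return "full-context" if the corpus fits the window, else "rag"."""
    total = sum(estimate_tokens(doc) for doc in documents)
    budget = context_tokens - reserved_tokens
    return "full-context" if total <= budget else "rag"


small_corpus = ["a" * 400, "b" * 1_200]  # about 400 estimated tokens
large_corpus = ["c" * 4_000_000]         # about 1,000,000 estimated tokens

print(choose_strategy(small_corpus))
print(choose_strategy(large_corpus))
```

In practice the estimate would be replaced by the model's own token counting, and a corpus that fits may still be served through retrieval for cost or latency reasons. The check only shows where the self-contained option becomes available.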

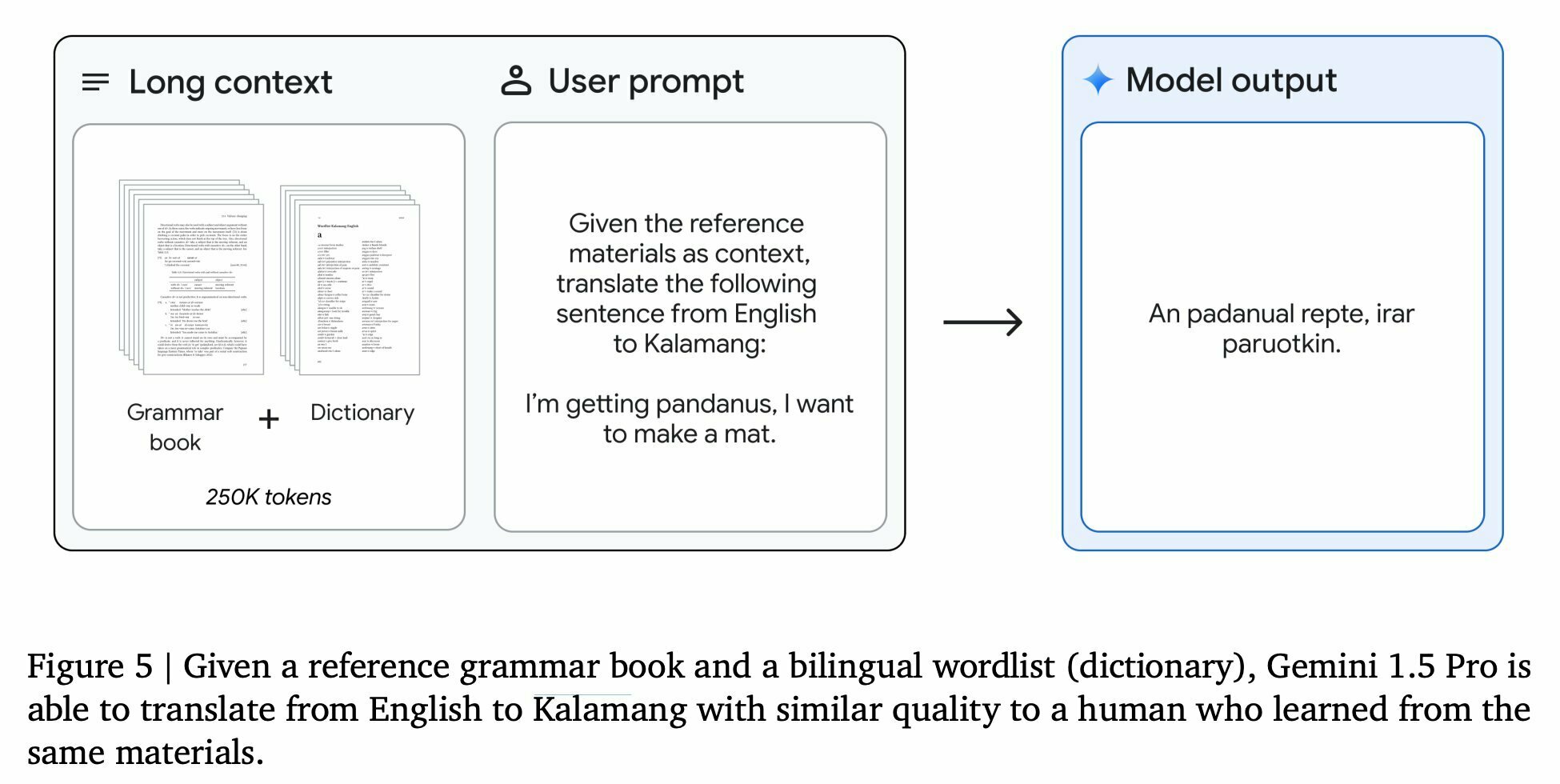

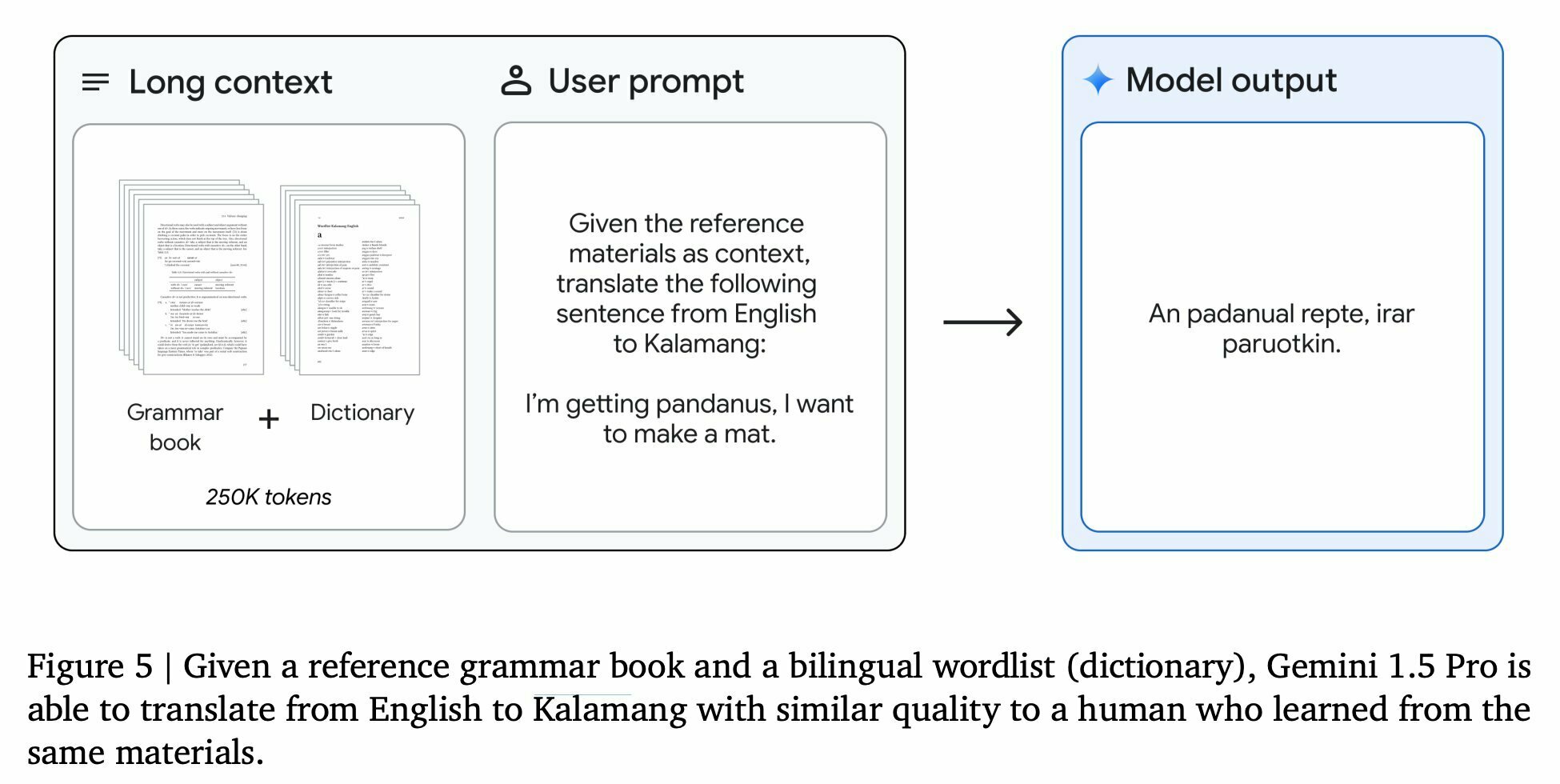

Extreme In-Context-Learning

English to Kalamang

Limited

Your sandbox for prompts

The Power of Context: Exploring Google Gemini 1.5

By Gerard Sans

In this talk, get an exclusive first look at Google's groundbreaking Gemini 1.5 in action! This multimodal language model features a Mixture of Experts (MoE) architecture and a revolutionary 1 million token context window, allowing it to understand complex inputs with exceptional depth. We'll explore live demos showcasing how this translates to vastly improved AI assistance for users and its impact on RAG systems.
